ProseraPod - Ask Industry Expert Series

A mini series where we dive deep into key industry questions and provide expert insights.

Ask Greg McMillan - What role do you see dynamic simulation playing in the future of best kiln and calciner temperature control?

Ask Greg McMillan

We ask Greg:

What role do you see dynamic simulation playing in the future of best kiln and calciner temperature control?

Greg's Response:

In kilns and calciners, there are typically four zones. First is a drying zone of constant temperature, followed by a heating zone of rising temperature and a reaction zone of constant temperature, and finally a burning zone characterized by a rapid rise and slow fall of temperature. The energy supplied in the drying and reaction zones is used to evaporate moisture and to supply the heat that drives the endothermic reaction, respectively.

The basic loop arrangement for kilns and calciners would use outlet temperature to adjust the heat pulled (e.g., air flow) through the drum and inlet temperature to adjust the heat input (e.g., fuel flow). Note that the outlet air temperature loop has reverse action where an increase in temperature will cause a decrease in air flow. The outlet loop is not tuned as aggressively as the inlet loop. At steady-state or for small upsets, the interaction is acceptable. However, large upsets require the use of feedforward or decouplers to prevent excessive cycling.

Throughput can be maximized by adding a valve position controller to gradually trim the outlet temperature set point and the reaction point along the drum to keep the fuel valve at its largest controllable position. At absolute gas temperatures above 1100 K, heat transfer is almost entirely by radiation, and the gas temperature is consequently determined by the excess combustion air. Adjusting the fuel or air flow changes the excess air and thus the heat transfer to the reaction and burning zones. Convective heat transfer to the cooler zones then depends on the heat left after radiation to the hotter zones.
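A valve position controller (VPC) is often a slow, integral-only controller whose measurement is the output (valve position) of another loop and whose output trims that loop's set point. The sketch below is a minimal illustration under stated assumptions, not Greg's implementation: the class name, the 85% target, the reset gain, and the set point limits are hypothetical, and the sign of `action` depends on the process and should be confirmed (for example, in a dynamic simulation) before use.

```python
from dataclasses import dataclass


@dataclass
class ValvePositionController:
    """Minimal integral-only valve position controller (hypothetical sketch).

    Measurement: position (%) of the valve to be pushed toward its limit.
    Output: a trimmed set point for the upstream loop (e.g., outlet temperature).
    """
    target_pct: float      # largest controllable valve position (assumed value)
    reset_gain: float      # set point units per (% error * second); keep it slow
    sp_low: float          # set point clamp, low (process protection)
    sp_high: float         # set point clamp, high
    sp: float              # current trimmed set point
    action: float = 1.0    # +1 or -1; sign depends on the process, verify first

    def update(self, valve_pct: float, dt_s: float) -> float:
        # Integral action only: the set point keeps moving until the valve
        # reaches its target position.
        error = self.target_pct - valve_pct
        self.sp += self.action * self.reset_gain * error * dt_s
        # Clamping the integrated set point also acts as anti-windup.
        self.sp = min(self.sp_high, max(self.sp_low, self.sp))
        return self.sp


# Usage: valve at 75% with an 85% target moves the set point up 0.1 per second.
vpc = ValvePositionController(target_pct=85.0, reset_gain=0.01,
                              sp_low=150.0, sp_high=250.0, sp=200.0)
new_sp = vpc.update(valve_pct=75.0, dt_s=1.0)
```

Keeping the reset gain small is what makes the VPC "gradual"; it should be much slower than the loop whose valve it watches so the two do not fight.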

An innovative control strategy can stabilize a lime kiln by using a fast oxygen loop that controls excess air by manipulating the forced air damper. The hot end temperature loop manipulates the oxygen set point, and the cold end temperature loop manipulates the fuel input. A feedforward signal from the fuel valve position, representing the air-to-fuel ratio, assists the oxygen loop; this signal must account for the installed characteristics of both the fuel valve and the air damper. The kiln pressure set point is trimmed based on fuel input to set the flame length.
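Installed characteristics are commonly handled with piecewise-linear signal characterization. The sketch below converts fuel valve position to fuel flow with one table, applies an air-to-fuel ratio, and then inverts the damper's installed characteristic to get a damper position that could be added to the oxygen controller output. The tables, the ratio, and the function name are hypothetical placeholders; real characteristics would come from plant tests or the simulation.

```python
import numpy as np

# Hypothetical installed characteristics, in % of span (both must be monotonic).
FUEL_VALVE_POS = np.array([0.0, 20.0, 40.0, 60.0, 80.0, 100.0])  # valve position, %
FUEL_FLOW = np.array([0.0, 35.0, 60.0, 78.0, 92.0, 100.0])       # fuel flow, % of max
DAMPER_POS = np.array([0.0, 20.0, 40.0, 60.0, 80.0, 100.0])      # damper position, %
AIR_FLOW = np.array([0.0, 45.0, 70.0, 85.0, 95.0, 100.0])        # air flow, % of max


def damper_feedforward(fuel_valve_pct: float, air_to_fuel: float) -> float:
    """Feedforward damper position (%) for a given fuel valve position (%)."""
    # Fuel valve installed characteristic: position -> flow.
    fuel_flow = np.interp(fuel_valve_pct, FUEL_VALVE_POS, FUEL_FLOW)
    # Required air flow from the air-to-fuel ratio (both in % of max), capped at 100%.
    air_flow = min(100.0, air_to_fuel * fuel_flow)
    # Inverse damper installed characteristic: flow -> position.
    return float(np.interp(air_flow, AIR_FLOW, DAMPER_POS))


ff = damper_feedforward(fuel_valve_pct=40.0, air_to_fuel=1.0)  # roughly 32 (% open)
```

Without the two tables, a linear valve-to-damper ratio would over- or under-supply air wherever the installed curves bend, leaving the oxygen loop to absorb the error.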

Simulations that include operating conditions and equipment design with a Digital Twin can help find and confirm the best control strategies.

For much more knowledge, see the ISA book Advanced Temperature Measurement and Control, Second Edition (use promo code ISAGM10 for a 10% discount on Greg’s ISA books).

Ask Ron Besuijen - How do focus and stress management enhance problem-solving?

Ask Ron Besuijen

We ask Ron:

How do focus and stress management enhance problem-solving?

Ron's Response:

In high-pressure situations, even the best technical knowledge is of little use without focus and the ability to manage stress effectively. Simulation training helps operators develop these essential skills by:

- Teaching Prioritization: In a high-stakes scenario, operators must quickly determine what needs immediate attention. Through repeated exposure to complex situations, simulation training helps operators practice prioritizing tasks and managing competing demands. As Dave Strobhar aptly puts it, “Sometimes a move has to be good enough, and you have to move on.”

- Avoiding Fixation: It’s easy to get stuck on one problem or explanation, especially under stress. Simulation training teaches operators to stay flexible, exploring alternative possibilities and keeping their perspective broad.

- Stress Management Techniques: Techniques like tactical breathing, self-talk, and visualization are incorporated into training, helping operators stay calm and maintain clarity under pressure.

Familiarity breeds confidence, and simulation training minimizes the fear of the unknown by allowing operators to face and overcome challenges repeatedly. This confidence shifts stress from being debilitating to becoming a focusing tool—helping operators channel their energy toward solving the problem at hand.

At one petrochemical facility, the strategy after a trip of a large compressor is to attempt one quick restart, which limits the event to a short interruption of production. During testing of the auto-start on a seal oil pump, a problem caused a trip, and during the restart the machine failed to pass through the compressor critical speeds quickly enough, so the speed returned to slow roll. Because the operator had trained for this scenario on the simulator, he felt comfortable trying one more restart, and it succeeded. This prevented a day of lost production.

With consistent practice, operators learn to embrace stress as a challenge rather than a threat. This mental shift transforms them into resilient problem-solvers, capable of thriving in even the most demanding situations.

Ask Julie Smith - What strategies have you found effective for starting small, low-risk simulation projects to build credibility and trust?

Ask Julie Smith

We ask Julie:

What strategies have you found effective for starting small, low-risk simulation projects to build credibility and trust?

Julie's Response:

Back in 2019, the new DuPont was formed after the merger and split with Dow. Many sites were struggling to continue their migration projects to move away from the Dow proprietary MOD system. There were also a few cases where shared site infrastructure (utilities, raw materials, etc.) was changing ownership. At one site, we found a case where both of these were happening simultaneously for a highly hazardous material. We convinced the process automation leader to use this case as a pilot to show what our simulation technology can do. It was small enough in scope (less than 200 I/O) that the risk to the project was low; they could always revert to a tie-back version if nothing worked as promised.

Instead, we exceeded expectations. In fact, the more robust simulation helped to identify a design deficiency that would have caused potential problems, allowing the site to address it proactively before taking ownership of the asset. We then proceeded to simulate the core processes in support of their migrations over the next 5 years, saving many millions of dollars and ensuring a safe transition.

Ask Greg McMillan - What role do you see dynamic simulation playing in the future of best vessel temperature control?

Ask Greg McMillan

We ask Greg:

What role do you see dynamic simulation playing in the future of best vessel temperature control?

Greg's Response:

A vessel is defined here as a storage, recycle, blend, or surge volume with no heat of reaction and no agitator. Fortunately, most vessel volumes are so large that the process time constant is extremely large and, consequently, the rate of change of temperature is exceptionally slow. This matters because the only mixing in storage and feed tanks typically comes from incoming flows (e.g., feed and recirculation) and convective currents. The addition of eductors and the location of dip tubes or spargers can greatly reduce the loop dead time. Ideally, thermowells should be installed in the elbows of recirculation lines. If they must be installed in the vessel, they should be in areas where the fluid velocity is largest, to reduce the measurement time constant, but farthest away from steam spargers, to avoid erratic, nonrepresentative temperature readings. Storage and feed tank temperature loops often have a process time constant so large that a high controller gain can be used despite a long process dead time from poor mixing.
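A rough way to see why a large vessel tolerates a high controller gain is to treat it as well mixed, so the process time constant is about volume divided by throughput, and then apply a lambda (IMC) PI tuning rule, Kc = τ / (Kp (λ + θ)). Lambda tuning is a swapped-in illustration rather than necessarily Greg's method, and the volumes, flow, dead time, and normalized gain below are hypothetical.

```python
def residence_time_min(volume_m3: float, flow_m3_per_h: float) -> float:
    """Well-mixed vessel time constant (min) approximated as volume / throughput."""
    return 60.0 * volume_m3 / flow_m3_per_h


def lambda_pi_tuning(kp: float, tau: float, theta: float, lam: float):
    """Lambda (IMC) PI tuning for a first-order-plus-dead-time process.

    Returns (controller gain, integral time in the units of tau).
    """
    kc = tau / (kp * (lam + theta))
    ti = tau
    return kc, ti


# Large storage tank: 500 m3 at 50 m3/h, 30 min dead time from poor mixing.
tau_big = residence_time_min(500.0, 50.0)                                  # 600 min
kc_big, _ = lambda_pi_tuning(kp=1.0, tau=tau_big, theta=30.0, lam=30.0)    # 10.0

# Smaller vessel with the same dead time: 50 m3 at 50 m3/h.
tau_small = residence_time_min(50.0, 50.0)                                 # 60 min
kc_small, _ = lambda_pi_tuning(kp=1.0, tau=tau_small, theta=30.0, lam=30.0)  # 1.0
```

With λ held equal to the dead time, the allowable gain scales directly with the time constant, so the tenfold larger vessel permits a tenfold higher gain despite the same poor mixing.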

For throttling of coolant flow to coils or jackets of small vessels with a continuous discharge flow, the same concerns described for heat exchangers apply: excessive dead time and a high process gain at low flow can cause a limit cycle. Therefore, gain scheduling and signal characterization are useful techniques. Also, a feedforward signal can be computed from a simple energy balance, as was done for the heat exchanger. A better solution is to maintain a high, constant coil or jacket recirculation flow, with a secondary coil or jacket inlet temperature controller manipulating the makeup coolant flow to the inlet and a pressure controller manipulating the return flow from the coil or jacket outlet. When heating is required, having the inlet temperature controller manipulate steam flow to a steam injector in the high-flow coil or jacket recirculation line provides the best dynamics. For vessels with zero discharge flow, set point profiles and proportional-plus-derivative (PD) controllers (like those used on batch reactors) can help prevent overshoot.

Simulations that include operating conditions and equipment design with a Digital Twin can help find and confirm the best control strategies.

For much more knowledge, see the ISA book Advanced Temperature Measurement and Control, Second Edition (use promo code ISAGM10 for a 10% discount on Greg’s ISA books).

Ask Ron Besuijen - What role does experience play in effective problem-solving?

Ask Ron Besuijen

We ask Ron:

What role does experience play in effective problem-solving?

Ron's Response:

Pattern recognition and tacit knowledge—the instincts gained through experience—are indispensable for problem-solving. But how do you cultivate these skills in a controlled environment? Simulation training provides the perfect solution:

- Dynamic Mental Networks: Simulators allow operators to build mental representations of how systems behave under various conditions. By repeatedly engaging with these systems, operators develop an intuitive understanding of how to anticipate and respond to changes. They also learn to validate that their responses were effective or need adjusting.

- Recognizing Anomalies: The ability to spot subtle deviations is critical in preventing issues before they escalate. Simulation training sharpens this skill by exposing operators to diverse scenarios, helping them notice and interpret early warning signs.

- Applying Action Scripts: Experience breeds efficiency. Simulators enable operators to test and refine action scripts, which are quick, informed responses based on past experience. This creates a toolkit of proven strategies for handling real-world challenges. Practicing emergency procedures on a simulator trains operators to execute critical steps quickly and without hesitation.

In a recent upset, an experienced operator assisted at the console and quickly gathered the plant status. Determining that a PSV was about to lift and that the current rate reduction was not sufficient, he asked that rates be reduced by one third using an application. This intervention likely prevented several days of lost production.

Simulation training accelerates the accumulation of tacit knowledge. It gives operators the chance to repeatedly encounter and resolve complex problems, embedding lessons that would otherwise take years of real-world experience to learn. This training transforms knowledge into instinct, enabling operators to act quickly and decisively when it matters most.

In the posts that follow, we’ll dive deeper into the remaining perspectives, illustrating how simulation training transforms how operators approach challenges. Together, these elements foster growth, build expertise, and enable resilience in even the most dynamic and high-pressure situations. Let’s explore how simulation training goes beyond technical skills to shape a mindset that’s ready for anything.

Ask Greg McMillan - What role do you see dynamic simulation playing in the future of best heat exchanger temperature control?

Ask Greg McMillan

We ask Greg:

What role do you see dynamic simulation playing in the future of best heat exchanger temperature control?

Greg's Response:

Flows much lower than design cause a high process gain and a long process dead time, which can lead to temperature oscillations and possible instability. Low velocities can also dramatically increase fouling of heat transfer surfaces. Flows much higher than design cause a low process gain (poor sensitivity), which can lead to wandering of the temperature and possible loss of temperature control.
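The flow dependence can be seen with an idealized steam heater in which all condensing heat goes into the process stream: the steady-state gain of outlet temperature with respect to steam flow is the latent heat divided by (process flow × heat capacity), and the transport delay through downstream piping is volume divided by volumetric flow, so both grow as process flow drops. Property values and pipe volume are hypothetical. The last function sketches one simple form of gain scheduling (controller gain proportional to flow, holding Kc × Kp at its design value); note that it does not correct the longer dead time.

```python
def heater_gain_and_delay(process_flow_kg_s: float,
                          cp_kj_per_kg_k: float = 4.18,
                          steam_latent_kj_per_kg: float = 2000.0,
                          pipe_volume_m3: float = 0.5,
                          density_kg_per_m3: float = 1000.0):
    """Return (process gain in K per kg/s of steam, transport delay in s)."""
    # Energy balance: F * cp * (T_out - T_in) = F_steam * latent heat.
    gain = steam_latent_kj_per_kg / (process_flow_kg_s * cp_kj_per_kg_k)
    # Transport delay = volume / volumetric flow.
    delay_s = pipe_volume_m3 * density_kg_per_m3 / process_flow_kg_s
    return gain, delay_s


def scheduled_gain(kc_design: float, flow: float, design_flow: float) -> float:
    """Scale controller gain with flow so that Kc * Kp stays at its design value."""
    return kc_design * flow / design_flow


design_flow = 10.0
for flow in (2.5, 10.0, 20.0):   # 25%, 100%, and 200% of design
    kp, delay = heater_gain_and_delay(flow)
    kc = scheduled_gain(2.0, flow, design_flow)
    print(f"flow={flow:5.1f} kg/s  Kp={kp:6.2f} K/(kg/s)  delay={delay:6.1f} s  Kc={kc:4.2f}")
```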

When peak error or the initial transient must be minimized, feedforward control should be used. A rather simple energy balance that equates the heat lost by the hot side to the heat gained by the cold side yields a solution. Normal operating values are used for unmeasured inputs that are relatively constant. For example, if the main upset is feed flow, a measurement of this flow is required, but assumed operating conditions can be used for the unmeasured inlet temperatures. It is critical that the feedforward calculation use the temperature set point rather than the measurement, to avoid positive feedback. The feedforward signal is added to the output of the feedback controller, and a bias of 50% is subtracted so that the temperature controller can make a negative correction to the feedforward signal as large as its positive correction. The manipulated flow is best achieved by means of a flow controller, with a cascade of exchanger temperature to coolant or steam flow. If the temperature controller output goes directly to a control valve, signal characterization of the installed valve characteristic should be used to convert the desired flow to the required valve position. The signal divider that compensates for process gain nonlinearities should be applied to the controller output before the summer.
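The energy balance feedforward can be sketched for a cooler: heat removed from the process, F_p × cp_p × (T_in − T_sp), equals heat gained by the coolant, F_c × cp_c × (T_c,out − T_c,in). The set point T_sp is used, not the measured outlet temperature, and the unmeasured coolant temperatures are fixed at assumed normal values. The 50% bias and the divider follow the text; applying the divider to the bias-corrected controller output is an assumption, and all function names and numbers are illustrative.

```python
def coolant_feedforward_kg_s(feed_flow_kg_s: float, feed_temp_in_c: float,
                             temp_sp_c: float,
                             cp_feed: float = 2.0,        # kJ/(kg K), assumed
                             cp_coolant: float = 2.0,     # kJ/(kg K), assumed
                             coolant_in_c: float = 30.0,  # unmeasured, normal value
                             coolant_out_c: float = 50.0  # unmeasured, normal value
                             ) -> float:
    """Coolant flow that removes the heat needed to bring the feed to set point."""
    duty_kw = feed_flow_kg_s * cp_feed * (feed_temp_in_c - temp_sp_c)
    return duty_kw / (cp_coolant * (coolant_out_c - coolant_in_c))


def combined_output_pct(ff_flow_kg_s: float, max_coolant_kg_s: float,
                        pid_out_pct: float, gain_ratio: float) -> float:
    """Feedforward plus feedback, in % of coolant flow span.

    gain_ratio: current process gain divided by its design value (assumed known,
    e.g., from flow); dividing by it compensates for the gain nonlinearity.
    """
    ff_pct = 100.0 * ff_flow_kg_s / max_coolant_kg_s
    # Subtract the 50% bias so feedback can correct equally in both directions,
    # then apply the divider before the summer.
    fb_pct = (pid_out_pct - 50.0) / gain_ratio
    return min(100.0, max(0.0, ff_pct + fb_pct))


fc = coolant_feedforward_kg_s(feed_flow_kg_s=10.0, feed_temp_in_c=150.0, temp_sp_c=90.0)
out = combined_output_pct(fc, max_coolant_kg_s=60.0, pid_out_pct=60.0, gain_ratio=2.0)
```

With the feedback controller sitting at 50%, the output equals the feedforward alone; the combined value would typically be the set point of a coolant flow controller in the cascade.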

If the exchanger outlet temperature can be adjusted, a valve position controller can slowly trim the temperature set point to hold the coolant valve at an optimum position: high enough to prevent the low throttle positions that promote fouling of heat transfer surfaces, yet low enough to prevent the high throttle positions that lose process gain and increase utility usage.

Simulations that include operating conditions and equipment design with a Digital Twin can help find and confirm the best control strategies.

For much more knowledge, see the ISA book Advanced Temperature Measurement and Control, Second Edition (use promo code ISAGM10 for a 10% discount on Greg’s ISA books).

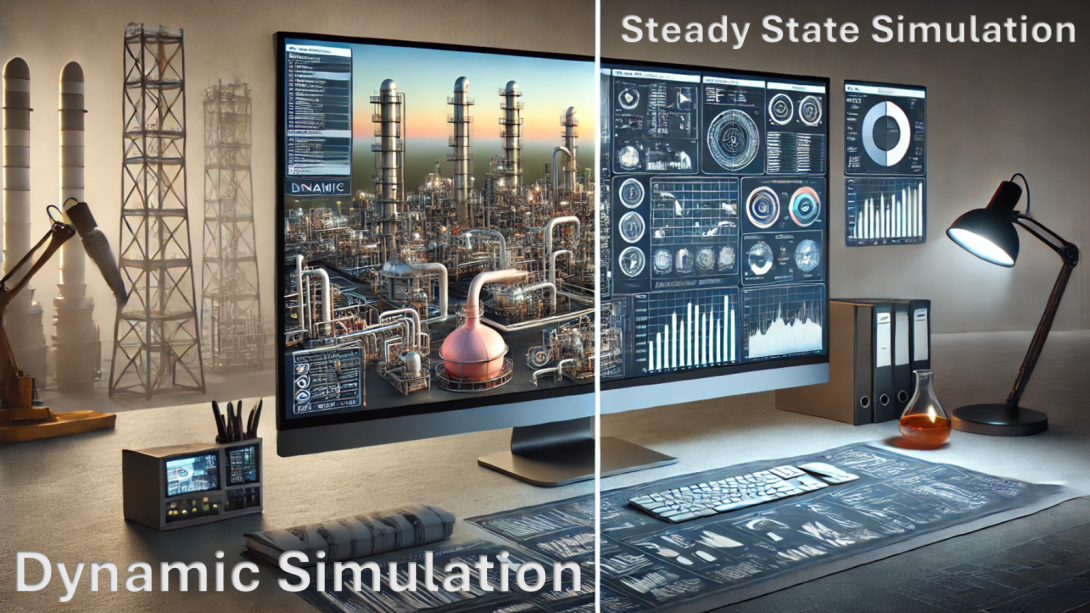

Ask Russ Rhinehart - What are some of the benefits of dynamic simulation over steady state simulation?

Ask Russ Rhinehart

We ask Russ:

What are some of the benefits of dynamic simulation over steady state simulation?

Russ' Response:

Traditionally, process design uses steady state (SS) simulation, and it serves its purpose well. Most analysis of processes and textbook instruction on how processes work use SS relations. These could be termed static models, which represent process interactions, gains, and sensitivities when the process has lined out to steady operations.

The problem is that SS models do not indicate how the process moves from an initial to a final SS. The path it takes to move from one SS to another is termed a transient, which is described by dynamic models. Dynamic models might be called time dependent, transient, or temporal models. Understanding the transient can be essential.

For instance:

- In a long pipeline, a rapid change in a flow control valve position, or switching a pump on or off, can create a large temporary pressure excursion as the fluid accelerates or decelerates, causing water hammer or temporary cavitation.

- Changing flow rates can substantially change the transport delay in a delivery system, which may cause operators or controllers to overreact. At SS it does not matter how long the line is, but a long line can cause a confounding delay that would not be revealed by SS simulation.

- Changing tank levels can substantially change the lag time in mixing. This could greatly amplify pH overshoot and undershoot when a delivery line after the reagent valve continues to drip after shutoff or needs to be filled after the valve opens. SS analysis will not reveal this.

- There are continual perturbations in composition, temperatures, and flow rates of inflows to a distillation column. These may push the column into weeping or flooding situations, even though SS models using nominal conditions indicate that operation is permissible.

- A short-duration input perturbation might wash out through in-process dilution and have no impact, but a longer or larger one might cause a specification or operational violation.
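The washout point is easy to show with a few lines of dynamic simulation. A steady-state model of a well-mixed tank says the outlet equals the inlet, so any disturbance passes straight through; a dynamic model, τ dc/dt = c_in − c, shows that a short pulse is mostly diluted while a long one is not. The time constant, pulse durations, and the 0.5 limit are hypothetical.

```python
def pulse_peak(tau_min: float, pulse_min: float, height: float = 1.0,
               dt_min: float = 0.01) -> float:
    """Peak outlet deviation of a well-mixed tank (first-order lag) for an inlet pulse.

    Integrates tau * dc/dt = c_in - c with explicit Euler steps.
    """
    t_end = pulse_min + 5.0 * tau_min
    c = 0.0
    peak = 0.0
    for k in range(int(round(t_end / dt_min))):
        t = k * dt_min
        c_in = height if t < pulse_min else 0.0
        c += dt_min / tau_min * (c_in - c)
        peak = max(peak, c)
    return peak


TAU = 10.0     # tank time constant, min (hypothetical)
LIMIT = 0.5    # allowable outlet deviation (hypothetical)
for pulse in (1.0, 30.0):
    peak = pulse_peak(TAU, pulse)
    # A steady-state model would predict a deviation of 1.0 for both pulses.
    print(f"pulse {pulse:4.1f} min -> peak {peak:.3f}, violation: {peak > LIMIT}")
```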

In designing a control system, one might find a clever solution using SS responses that does not work in practice because of the process transient responses. However much pride we take in a technical solution, what matters is not the solution or the affirmation of our ability. Use dynamic simulation to quantify the bottom-line benefit to the organization.

There are many benefits for using dynamic simulations to understand and test control-related functions and applications, including:

- Training operators and engineers to understand and manage the process.

- Teaching students about the fundamentals.

- Evaluating and demonstrating the economic benefit of the next level of advanced regulatory control or of model predictive control.

- Safely testing automation algorithms such as for steady-state detection, fault detection, auto tuning, getting controller models, tuning algorithms, etc.

- Safely testing process and control system design options for insensitivity to disturbances.

- Comparing control strategies for economic benefit (quality giveaway, constraint violations, waste generation, calibration error robustness, capital cost of devices, maintenance).

- Generating data to test Big Data algorithms for developing models.

I encourage you to use phenomenological models in dynamic simulators that legitimately represent your process, as opposed to FOPDT, or trivial mechanistic, or empirical black-box models.

In subsequent ProseraPods, I’ll introduce how to create your own first-principles models, how to simulate environmental vagaries, how to calibrate and validate models, and how to use the models to evaluate the various economic indicators of transient events. I hope to visit with you later. Meanwhile, visit my web site https://www.r3eda.com/ to access information about modeling, control, optimization, and statistical analysis.

Ask Ron Besuijen - How does curiosity drive a problem-solving mindset?

Ask Ron Besuijen

We ask Ron:

How does curiosity drive a problem-solving mindset?

Ron's Response:

Curiosity is the cornerstone of a problem-solving mindset. It’s about asking “why” at every opportunity, not just when something goes wrong, but to understand the deeper principles at play. Simulation training nurtures this curiosity in several ways:

- Knowledge Exploration: Operators are encouraged to go beyond procedural steps, digging into the "how" and "why" of process theory. Simulators provide a platform to test these theories in real time, offering insights into dynamic system behaviors.

- Questioning Assumptions: After each scenario, operators can analyze their choices, reframe their mental models, and test alternative solutions. This reflective practice helps uncover blind spots and fosters adaptive thinking.

Think back to the last time you struggled with a problem. Somewhere you made an assumption: either your mental model of the process was incomplete or a piece of critical information was missed.

- Challenging Beliefs: Simulation training creates a safe space to challenge established practices. Operators can experiment with unconventional approaches and learn from unexpected outcomes, fostering innovation.

By cultivating curiosity, simulation training doesn’t just teach operators how to react—it inspires them to think critically, question norms, and seek better ways of doing things. The result? A growth mindset that empowers continuous learning and improvement.

In the posts that follow, we’ll dive deeper into the remaining perspectives, illustrating how simulation training transforms how operators approach challenges. Together, these elements foster growth, build expertise, and enable resilience in even the most dynamic and high-pressure situations. Let’s explore how simulation training goes beyond technical skills to shape a mindset that’s ready for anything.

Ask Ron Besuijen - How can simulation training cultivate a problem-solving mindset?

Ask Ron Besuijen

We ask Ron:

How can simulation training cultivate a problem-solving mindset?

Ron's Response:

To answer this question, let us look at three powerful perspectives that form the foundation of this mindset:

- Curiosity and Openness to Possibilities

- Pattern Recognition and Tacit Knowledge

- Focus and Stress Management

Simulation training provides a unique opportunity to develop these traits by creating a safe environment where operators can explore, make mistakes, and learn without real-world consequences. It offers the ability to pause, reflect, and try again—uncovering new insights each time.

By cultivating curiosity, pattern recognition, and stress management, simulation training equips operators with the tools they need to excel in complex environments.

In the posts that follow, we’ll dive deeper into each perspective, illustrating how simulation training transforms how operators approach challenges. Together, these elements foster growth, build expertise, and enable resilience in even the most dynamic and high-pressure situations. Let’s explore how simulation training goes beyond technical skills to shape a mindset that’s ready for anything.

Ask Greg McMillan - What role do you see dynamic simulation playing in the future of best temperature measurement installation?

Ask Greg McMillan

We ask Greg:

What role do you see dynamic simulation playing in the future of best temperature measurement installation?

Greg's Response:

RTD sensors should use four lead wires, and TC sensors should use extension wires of the same material as the sensor, kept as short as possible, to minimize the effect of electromagnetic interference (EMI) and other interference on the low-level sensor signal. The temperature transmitter should be mounted as close to the process connection as possible, ideally on the thermowell.
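Why lead wires matter for an RTD can be shown with the Callendar-Van Dusen equation for a Pt100, R(T) = R0 (1 + A T + B T²) for T ≥ 0 °C, using the standard IEC 60751 coefficients. In a two-wire connection, the resistance of both lead wires is added to the sensor resistance; a four-wire measurement excludes it. The 0.5 Ω per-lead value is hypothetical.

```python
import math

R0 = 100.0        # Pt100 resistance at 0 degC, ohm
A = 3.9083e-3     # IEC 60751 coefficient, 1/degC
B = -5.775e-7     # IEC 60751 coefficient, 1/degC^2


def pt100_resistance(t_c: float) -> float:
    """Callendar-Van Dusen resistance for 0 <= T <= 850 degC."""
    return R0 * (1.0 + A * t_c + B * t_c ** 2)


def pt100_temperature(r_ohm: float) -> float:
    """Invert the quadratic for T >= 0 degC (the physically meaningful root)."""
    disc = A * A - 4.0 * B * (1.0 - r_ohm / R0)
    return (-A + math.sqrt(disc)) / (2.0 * B)


true_t = 100.0
r_sensor = pt100_resistance(true_t)
lead_ohm = 0.5  # hypothetical resistance of each lead wire

four_wire_t = pt100_temperature(r_sensor)                  # leads excluded
two_wire_t = pt100_temperature(r_sensor + 2.0 * lead_ohm)  # both leads in series
print(f"4-wire: {four_wire_t:.3f} degC, 2-wire: {two_wire_t:.3f} degC")
```

At about 0.38 Ω/°C near 100 °C, one ohm of total lead resistance reads as an error of more than 2.5 °C, which is far larger than the RTD's own repeatability.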

To minimize conduction error (error from heat loss along the sensor sheath or thermowell wall from tip to flange or coupling), the immersion length should be at least 10 times the diameter of the thermowell or sensor sheath for a bare element. For high velocity streams and bare element installations, it is important to do a fatigue analysis because the potential for failure from vibration increases with immersion length.

The process temperature will vary with location in a vessel or pipe because of imperfect mixing and wall effects. For highly viscous fluids such as polymers and melts flowing in pipes and extruders, the fluid temperature near the wall can be significantly different from that at the centerline. Pipelines for specialty polymers are often less than 4 inches in diameter, which makes it difficult to get sufficient immersion length and a centerline temperature measurement. The best way to get a representative centerline measurement is to insert the thermowell in an elbow, facing into the flow. If the thermowell faces away from the flow, swirling and flow separation from the elbow can create a noisier and less representative measurement. An angled insertion can increase the immersion length over a perpendicular insertion. A swaged or stepped thermowell can reduce the immersion length requirement by reducing the diameter near the tip. Thermowells with stepped stems also provide the maximum separation between the wake frequency (vortex shedding) and the natural frequency (oscillation rate determined by the properties of the thermowell itself).
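The benefit of an angled insertion is simple geometry: to put the tip on the centerline, a thermowell inserted at angle θ to the pipe axis needs an immersion length of (D/2)/sin θ, versus D/2 for a perpendicular insertion. This sketch ignores nozzle standoff and wall thickness, and the 3-inch bore and 0.75-inch well diameter are hypothetical values chosen to resemble a small polymer line.

```python
import math


def immersion_to_centerline(pipe_id_in: float, angle_deg: float) -> float:
    """Immersion length (in) from the inner wall to the centerline.

    angle_deg is measured between the thermowell and the pipe axis
    (90 = perpendicular). Nozzle standoff and wall thickness are ignored.
    """
    return (pipe_id_in / 2.0) / math.sin(math.radians(angle_deg))


PIPE_ID = 3.0   # in, hypothetical
WELL_OD = 0.75  # in, hypothetical
for angle in (90.0, 45.0, 30.0):
    length = immersion_to_centerline(PIPE_ID, angle)
    print(f"{angle:4.0f} deg: immersion {length:.2f} in, L/D {length / WELL_OD:.1f}")
```

Even at 30°, L/D stays low in a 3-inch line, which is why elbow insertion and reduced-tip (stepped or swaged) wells, which shrink D near the tip, are attractive.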

Tight-fitting, spring-loaded sheathed sensors should be used to eliminate any air gap between the sensor sheath and the inside wall and bottom of the thermowell, because such a gap dramatically increases the response time.

Simulations that include the sensor response time and the velocity and composition at the thermowell tip can confirm the best installation.
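One thing such a simulation reveals is how a slow sensor hides real process variability. Assuming a first-order measurement lag τ and a sinusoidal process oscillation of period P, the measured amplitude is the true amplitude times 1/√(1 + (2πτ/P)²), and the lag adds a delay of atan(2πτ/P)/(2π/P). The two time constants (tight fit versus air gap) and the period are hypothetical.

```python
import math


def amplitude_ratio(tau_s: float, period_s: float) -> float:
    """Measured/true amplitude for a first-order sensor lag and a sinusoidal process."""
    omega_tau = 2.0 * math.pi * tau_s / period_s
    return 1.0 / math.sqrt(1.0 + omega_tau ** 2)


def phase_lag_s(tau_s: float, period_s: float) -> float:
    """Time delay (s) that the sensor lag adds at this oscillation period."""
    omega = 2.0 * math.pi / period_s
    return math.atan(omega * tau_s) / omega


PERIOD = 120.0  # s, hypothetical process oscillation period
for label, tau in (("tight fit", 10.0), ("air gap", 40.0)):
    print(f"{label:9s}: amplitude ratio {amplitude_ratio(tau, PERIOD):.2f}, "
          f"added lag {phase_lag_s(tau, PERIOD):.1f} s")
```

A sensor that shows only part of the oscillation, and shows it late, both misleads operators and adds effective dead time to the loop.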

For much more knowledge, see the ISA book Advanced Temperature Measurement and Control, Second Edition (use promo code ISAGM10 for a 10% discount on Greg’s ISA books).

TC and RTD Best Practices

- Ensure the distance from the equipment outlet (e.g., heat exchanger exit) to the sensor is at least 25 pipe diameters for a single phase to promote mixing (recombination of streams).

- Verify the transportation delay (distance/velocity or volume/flow) from the equipment outlet (e.g. heat exchanger exit) to the sensor is less than 4 seconds.

- Ensure the distance from the desuperheater outlet to the sensor provides a residence time (distance/velocity) that is greater than 0.2 sec

- Use an RTD for temperatures below 400 °C to improve threshold sensitivity, drift, and repeatability by more than a factor of ten compared to a TC, if vibration is not excessive.

- For RTDs at temperatures above 400 °C, minimize length without increasing conduction error and maximize sheath diameter to reduce error from insulation deterioration.

- Be extremely careful using RTDs at temperatures above 600 °C. A hermetically sealed and dehydrated sensor can help prevent an increase in platinum resistance from oxygen and hydrogen dissociation, but reliability and accuracy may still not be sufficient.

- For TCs at temperatures above 600 °C, minimize decalibration error from changes in TC composition by the best choice of sheath and TC type.

- For TCs at temperatures above 600 °C, ensure the sheath material is compatible with the TC type.

- For TCs above the temperature limit of sheaths, use the ceramic material with the best thermal conductivity and a design that minimizes measurement lag time.

- For TCs above the temperature limit of sheaths with gaseous contaminants or reducing conditions, use primary (outer) and secondary (inner) protection tubes, possibly purged, to prevent contamination of the TC element while still providing a reasonably fast response.

- In furnaces and kilns, ensure the location and design minimize radiation and velocity errors.

- Use immersion length long enough to minimize heat conduction error (e.g., L/D > 5).

- Use immersion length short enough to prevent vibration failure (e.g., L/D < 20).

- Ensure velocity is fast enough to provide a fast response (e.g., > 0.5 fps) and is fast enough to prevent fouling for sticky fluids and solids (e.g., > 5 fps).

- For pipes, locate the tip near the centerline.

- For vessels, extend the tip sufficiently past the baffles (e.g. L/D > 5).

- For columns, extend the tip sufficiently into tray or packing (e.g. L/D > 5).

- For TC, use ungrounded junction to minimize noise.

- To increase RTD reliability, use dual RTD elements, except when vibration failure is more likely because of the smaller gauge, in which case use separate redundant thermowells.

- To increase TC reliability, use sensors with dual isolated ungrounded junctions.

- For maximum reliability, better diagnosis of sensor integrity, and minimal effect of drift, use three separate thermowells with middle signal selection.

- Document any temperature setpoint changes made by operators for loops with TCs so that the changes can be evaluated as possible compensation for TC drift.

- Be aware that the color codes of TC sensor lead and extension wires vary by country; ensure drawings show the correct codes and electricians are alerted to unusual codes.

- Use a spring-loaded sheathed TC or RTD sensor that fits tightly in the thermowell to minimize lag from air acting as an insulator (e.g., annular clearance < 0.02 inch).

- If an oil fill is used to minimize thermowell lag, ensure tars or sludge do not form in the thermowell at high temperature and that the tip points down to keep the oil fill in the tip.

- Use Class 1 element and extension wire to minimize TC measurement uncertainty.

- Use Class A element and 4 lead wires to minimize RTD measurement uncertainty.

- Use integral mounted temperature transmitters for accessible locations to eliminate extension wire and lead wire errors and reduce noise.

- Use wireless integral-mounted transmitters assembled and calibrated by the measurement supplier to eliminate wiring errors, provide portability for process control improvement, and reduce calibration, wiring installation, and maintenance costs.

- Use “sensor matching” and proper linearization tables in the transmitter for primary control loops and Safety Instrumented Systems to achieve accuracy better than 1 °C.
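Two of the practices above translate directly into simple logic. Middle signal selection takes the median of three independent measurements, so a single failed or drifting sensor cannot pull the controlled value, and comparing each sensor with the median flags the outlier. The screening function checks a proposed location against several of the example thresholds listed above (25 pipe diameters, 4 s transport delay, L/D between 5 and 20, minimum velocity). The function names, the 1 °C deviation alarm, and the inputs are hypothetical.

```python
import statistics


def middle_signal_select(readings, alarm_dev=1.0):
    """Return (selected value, indices of sensors deviating from it by > alarm_dev)."""
    if len(readings) != 3:
        raise ValueError("middle signal selection expects three readings")
    selected = statistics.median(readings)
    suspects = [i for i, r in enumerate(readings) if abs(r - selected) > alarm_dev]
    return selected, suspects


def screen_location(distance_ft, pipe_id_ft, velocity_fps,
                    immersion_in, well_od_in, sticky=False):
    """Return a list of rule-of-thumb violations (empty list means none found)."""
    problems = []
    if distance_ft / pipe_id_ft < 25.0:
        problems.append("sensor closer than 25 pipe diameters to equipment outlet")
    if distance_ft / velocity_fps >= 4.0:
        problems.append("transport delay is not less than 4 s")
    ratio = immersion_in / well_od_in
    if not 5.0 < ratio < 20.0:
        problems.append(f"immersion L/D of {ratio:.1f} outside 5 to 20")
    min_velocity = 5.0 if sticky else 0.5
    if velocity_fps <= min_velocity:
        problems.append(f"velocity not above {min_velocity} fps")
    return problems


print(middle_signal_select([250.2, 250.4, 262.0]))   # (250.4, [2])
print(screen_location(10.0, 0.25, 5.0, 4.0, 0.5))     # []
print(screen_location(10.0, 0.25, 1.0, 4.0, 0.5, sticky=True))
```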

Ask Greg McMillan - What role do you see dynamic simulation playing in the future of best temperature measurement selection?

Ask Greg McMillan

We ask Greg:

What role do you see dynamic simulation playing in the future of best temperature measurement selection?

Greg's Response:

Temperature is often the most important of the common measurements because it is an indicator of process stream composition and product quality. Temperature measurements are also essential for equipment protection and performance monitoring.

In the process industry 99% or more of the temperature loops use thermocouples (TCs) or resistance temperature detectors (RTDs). The RTD provides sensitivity (minimum detectable change in temperature), repeatability, and drift that are an order of magnitude better than the thermocouple (Table 1). Sensitivity and repeatability are 2 of the 3 most important components of accuracy. The other most important component, resolution, is set by the transmitter. Drift is important for controlling at the proper setpoint and extending the time between calibrations. Operators often adjust setpoints to account for the unknown drift. When the TC is calibrated or replaced, the modified set point no longer works. The RTD is also more linear and much less susceptible to electromagnetic interference. The supposed issue of a slightly slower sensor response will be addressed in the next post on how to get the best installation.

Thermistors have seen only limited use in the process industry despite their extreme sensitivity and fast (millisecond) response, primarily because of their lack of chemical and electrical stability. Thermistors are also highly nonlinear, but this may be addressed by smart instrumentation.

Optical pyrometers are used when contact with the process is not possible or extreme process conditions cause chemical attack, physical damage, or an excessive decalibration, dynamic, velocity, or radiation error of a TC or RTD.

Simulations that include sensitivity, repeatability, drift, and EMF noise can show the advantage offered by the RTD.

For much more knowledge, see the ISA book Advanced Temperature Measurement and Control, Second Edition (use promo code ISAGM10 for a 10% discount on Greg’s ISA books).

Table 1: Comparison of thermocouple, platinum RTD, and thermistor

| Criteria | Thermocouple | Platinum RTD | Thermistor |
| --- | --- | --- | --- |
| Repeatability (°F) | 1 to 8 | 0.02 to 0.5 | 0.1 to 1 |
| Drift (°F/yr) | 1 to 20 | 0.01 to 0.1 | 0.01 to 0.1 |
| Sensitivity (°F) | 0.05 | 0.001 | 0.0001 |
| Temperature Range (°F) | -200 to 2000 | -200 to 850 | -150 to 300 |
| Signal Output (volts) | 0 to 0.06 | 1 to 6 | 1 to 3 |
| Power (watts at 100 ohm) | 1.6 × 10⁻⁷ | 4 × 10⁻² | 8 × 10⁻¹ |
| Minimum Diameter (inches) | 0.4 | 2 | 0.4 |
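Following the idea that simulations including sensitivity, repeatability, and drift can show the RTD's advantage, the sketch below takes a year of daily readings of a constant true temperature for each sensor type, using values picked from inside the ranges in Table 1. Treating repeatability as uniform random scatter and drift as linear are simplifying assumptions, and EMI noise is not modeled.

```python
import random


def worst_error_f(repeatability_f, drift_f_per_yr, sensitivity_f,
                  days=365, true_temp_f=300.0, seed=1):
    """Largest |reading - true| over a year of daily readings (deg F)."""
    rng = random.Random(seed)
    worst = 0.0
    for day in range(days + 1):
        years = day / days
        reading = (true_temp_f + drift_f_per_yr * years
                   + rng.uniform(-repeatability_f, repeatability_f))
        # Round to the nearest multiple of the sensitivity (smallest detectable change).
        reading = round(reading / sensitivity_f) * sensitivity_f
        worst = max(worst, abs(reading - true_temp_f))
    return worst


tc = worst_error_f(repeatability_f=2.0, drift_f_per_yr=5.0, sensitivity_f=0.05)
rtd = worst_error_f(repeatability_f=0.1, drift_f_per_yr=0.05, sensitivity_f=0.001)
print(f"worst error after one year: TC {tc:.2f} F, RTD {rtd:.3f} F")
```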

Ask Ron Besuijen - How can simulators be used to train operations and test Anti-Surge controllers to prevent surge conditions in centrifugal compressors?

Ask Ron Besuijen

We ask Ron:

How can simulators be used to train operations and test Anti-Surge controllers to prevent surge conditions in centrifugal compressors?

Ron's Response:

Let us first look at a definition from Kumar Dey: “Centrifugal compressor surge is a characteristic behavior of the compressor that occurs in situations when inlet flow is reduced, and the compressor head developed is so low that it cannot overcome the pressure at the compressor discharge. During a centrifugal compressor surge situation, the compressor outlet pressure (and energy) reduces dramatically which causes a flow reversal within the compressor”. This is considered a very dangerous and detrimental phenomenon because it causes compressor vibration that can result in the failure of compressor parts.

Surge conditions have caused loss of efficiency, mechanical damage, loss of containment, and loss of life. A compressor is most susceptible to surge during startup, at reduced load, or during sudden load changes. Surge can be managed by basic PID flow controllers or by a dedicated anti-surge control system.

Simulators have been successfully used to test and tune dedicated controller systems before they are installed in the process. This can reduce their commissioning time and allow the unit to return to full production sooner. It will also help to validate the accuracy of the control philosophy and expedite updating the startup procedures.

Training panel operators to respond to surge conditions is also critical. Basic PID flow controllers are typically inadequate to handle large surge events and require operator intervention. This intervention calls for a large increase in the controller output, which operators need to practice and be comfortable making.

Dedicated surge controllers can handle surge events more effectively. Operators will need to be trained on their functionality and on how to manage instrumentation failures. Several flow, pressure, and temperature transmitters provide inputs to the controller, and any of them can drift or fail and impact the control system. These failures can be practiced on a simulator to train the panel operators to detect them and respond correctly to mitigate their impact.
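As a sketch of how a training scenario might inject the transmitter failures described above, the hypothetical function below corrupts a simulated transmitter signal with either a linear drift or a frozen (stuck) value starting at a chosen sample. The function name, fault types, and parameters are illustrative assumptions, not features of any particular simulator or anti-surge controller.

```python
from typing import List, Optional


def inject_fault(
    true_values: List[float],
    fault: Optional[str],
    start_index: int,
    drift_per_sample: float = 0.0,
) -> List[float]:
    """Return the transmitter reading seen by the controller (hypothetical helper).

    fault=None     -> healthy transmitter (reading equals true value)
    fault="drift"  -> reading drifts linearly away from the true value
    fault="frozen" -> reading sticks at the value present at start_index
    """
    readings = []
    for i, value in enumerate(true_values):
        if fault is None or i < start_index:
            readings.append(value)
        elif fault == "drift":
            readings.append(value + drift_per_sample * (i - start_index))
        elif fault == "frozen":
            readings.append(true_values[start_index])
        else:
            raise ValueError(f"unknown fault type: {fault}")
    return readings


# Actual compressor flow falling toward surge (hypothetical values)
flow = [100, 95, 90, 85, 80]
print(inject_fault(flow, "frozen", 2))  # controller sees a steady 90
print(inject_fault(flow, "drift", 1, drift_per_sample=2.0))
```

A frozen flow reading is a useful drill because the controller, and the operator watching it, would see a steady flow while the actual flow keeps falling.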

Simulators are an excellent tool to train operators regardless of the control system. They can also be used to test new systems to increase effectiveness and reduce commissioning time.

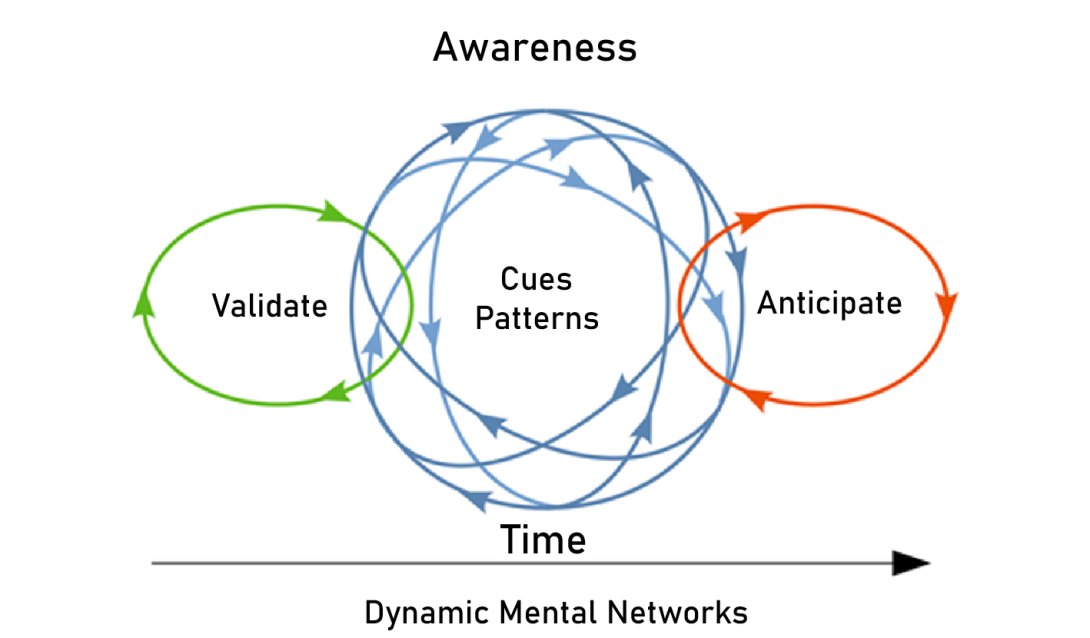


Ask Greg McMillan - What role do you see dynamic simulation playing in the future of best lime system design for pH control?

Ask Greg McMillan

We ask Greg:

What role do you see dynamic simulation playing in the future of best lime system design for pH control?

Greg's Response:

Lime feeders have a transportation delay equal to the length of the feeder divided by its speed. This transportation delay may be several minutes. To eliminate the need to increase the vessel size and the agitation power, the lime rotary valve speed can be base loaded, and the pH controller can manipulate the conveyor speed or the influent flow. If the pH controller manipulates the waste flow, the dissolution time associated with an increased lime delivery rate is also eliminated. The level controller on the influent tank slowly corrects the lime rotary valve base speed if the waste inventory gets too high or low.
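The feeder transportation delay is simply the feeder length divided by its speed. The numbers in this sketch (a 40 ft feeder moving at 10 ft/min) are hypothetical and only illustrate how a delay of several minutes arises.

```python
def transport_delay_min(length_ft: float, speed_ft_per_min: float) -> float:
    """Transportation delay (min) of a feeder or conveyor: length divided by speed."""
    if speed_ft_per_min <= 0:
        raise ValueError("speed must be positive")
    return length_ft / speed_ft_per_min


# Hypothetical 40 ft feeder moving at 10 ft/min
delay = transport_delay_min(40.0, 10.0)
print(f"Lime feeder transportation delay: {delay:.1f} min")  # 4.0 min
```

Because the delay is inversely proportional to speed, halving the feeder speed doubles the delay.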

The dissolution time of pulverized dry lime can be greatly reduced by slaking the lime and making it into a slurry. For example, a dissolution time of about 32 min for pulverized dry lime can be reduced to about 8 min as lime slurry. The dissolution time of lime slurry increases with age due to the agglomeration of small particles into larger particles, even though the lime slurry storage tank is mildly agitated. If the pH controller manipulates the lime feeder or water addition to the lime slurry storage tank, the equipment time constant of the slurry tank has the same effect as a slow valve time constant. To prevent adding this time constant to the loop, the lime feeder speed should be manipulated by a slurry tank level controller and the water addition rate ratioed to the lime feeder speed. The pH controller should manipulate the diluted lime slurry discharge flow that feeds the neutralization vessel. The diluted lime slurry discharge flow must be kept flowing by using a recycle loop to prevent settling and plugging in the reagent lines. A throttled globe valve will plug. The liner of a pinch diaphragm valve will fail due to erosion. Pulse width modulation of an on-off ball valve is the best alternative because it has the fewest maintenance problems and is relatively inexpensive to replace periodically.
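Pulse width modulation converts the pH controller output into an on-time within a fixed cycle, so the on-off ball valve delivers the requested average fraction of full flow. In this minimal sketch, the 10 s cycle period and the 0.5 s minimum pulse are assumed values, not recommendations from the source.

```python
def pwm_on_time(controller_output_pct: float, cycle_s: float = 10.0,
                min_pulse_s: float = 0.5) -> float:
    """Seconds the on-off valve stays open during one PWM cycle.

    The on-time is proportional to the controller output (0-100%).
    Pulses shorter than min_pulse_s are dropped, and off-times shorter than
    min_pulse_s are removed by holding the valve open for the whole cycle,
    because a real valve cannot stroke arbitrarily fast (assumed limits).
    """
    output = min(max(controller_output_pct, 0.0), 100.0)  # clamp to 0-100%
    on_time = cycle_s * output / 100.0
    if on_time < min_pulse_s:
        return 0.0
    if cycle_s - on_time < min_pulse_s:
        return cycle_s
    return on_time


def valve_state(t_s: float, controller_output_pct: float, cycle_s: float = 10.0) -> bool:
    """True if the valve is open at time t_s (each pulse starts at a cycle boundary)."""
    return (t_s % cycle_s) < pwm_on_time(controller_output_pct, cycle_s)


print(pwm_on_time(35.0))  # 3.5
```

The average reagent delivery over a cycle is the on-time divided by the cycle period times the full-open flow, so the cycle period should be short compared with the mixing time of the vessel for the pulses to be smoothed out.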

Dynamic simulations with automation, equipment, and process dynamics including dissolution time are needed to determine the best lime system design.

For much more knowledge, see the ISA book Advanced pH Measurement and Control Fourth Edition (use promo code ISAGM10 for a 10% discount on Greg’s ISA books).

Best Practices for Reagent System Design

The time for solids to dissolve can be horrendous (minutes). Although bubbles dissolve faster (seconds), the noise from fluctuations in concentration, in addition to the delay to complete dissolution, is problematic. For these reasons, using lime and ammonia for pH control is undesirable. Additional problems stem from highly viscous reagents, such as 97% sulfuric acid, which result in laminar flow in control valves and greater difficulty in mixing with process streams. Highly concentrated reagents cause extremely large reagent delivery delays due to low reagent flows (e.g., <1 gal/h). Strong acids and strong bases cause this same problem, plus the additional difficulty of a steeper titration curve and the consequent need for more precise final control elements and possibly more neutralization stages. The following best practices are offered to make reagents more suitable for pH control.

- Avoid reagents that have solids or bubbles, take more than a second to react, are highly concentrated, or are strong acids or strong bases.

- Increase the residence time in equipment and piping to provide complete dissolution of reagents and uniformity of reagents in the process stream.

- If a conveyor is used, manipulate the motor speed of a gravimetric feeder rather than the flow dumped on the conveyor inlet to eliminate the conveyor transportation delay.

- Use pressurized reagent pipelines with a coordinated isolation valve close-coupled to the reagent control valve outlet to prevent process backflow into the reagent pipeline.

- Minimize the volume between the reagent valve and its injection point into the process.

- Avoid using dip tubes by injecting reagent into the feed or recirculation stream.

- Dilute reagents using tight concentration control in a recirculation stream to a diluted reagent storage tank.

- Use Coriolis mass flow meters for accurate reagent flow measurement and, through their high-resolution density measurement, accurate concentration measurement for reagent dilution and pH flow ratio control.
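A quick Reynolds number check shows why highly viscous reagents end up in laminar flow. This sketch uses the pipe Reynolds number Re = ρvD/μ with the common rule of thumb that pipe flow below about 2000 is laminar and above about 4000 is turbulent. The line size, velocity, densities, and viscosities are hypothetical round numbers; the 40 times viscosity ratio echoes the comparison given for 98% sulfuric acid and 50% sodium hydroxide in the reagent selection discussion.

```python
def reynolds_number(density_kg_m3: float, velocity_m_s: float,
                    diameter_m: float, viscosity_pa_s: float) -> float:
    """Pipe Reynolds number Re = rho * v * D / mu (dimensionless)."""
    return density_kg_m3 * velocity_m_s * diameter_m / viscosity_pa_s


def flow_regime(re: float) -> str:
    """Rule-of-thumb regime boundaries for pipe flow."""
    if re < 2000:
        return "laminar"
    if re > 4000:
        return "turbulent"
    return "transitional"


D = 0.0127  # 1/2 inch line, m (hypothetical)
V = 0.5     # m/s (hypothetical)
water_like = reynolds_number(1000.0, V, D, 0.001)  # about 1 cP
viscous = reynolds_number(1500.0, V, D, 0.040)     # about 40 cP reagent
print(f"water-like: Re={water_like:.0f} ({flow_regime(water_like)})")
print(f"viscous:    Re={viscous:.0f} ({flow_regime(viscous)})")
```

At the same line size and velocity, the water-like stream is turbulent (Re about 6350) while the viscous reagent is laminar (Re about 240), which is the situation dilution is meant to avoid.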

Ask Ron Besuijen - How can simulation training improve operations' responsiveness to changing conditions in a dynamic environment?

Ask Ron Besuijen

We ask Ron:

How can simulation training improve operations' responsiveness to changing conditions in a dynamic environment?

Ron's Response:

Whenever an incident occurs, the investigators freeze time and slowly break down the sequence of events, viewing it from all perspectives. If only we could give our process operators this much time to reflect on the problems they encounter. David Woods, a resilience engineer, discusses on an NDM podcast how the operators at Three Mile Island had 10 minutes to figure out something they had never been told could happen. Then, after six months and $60 million, the experts determined exactly what should have been done.

Operators must be able to make decisions and then respond to what happens. They must hedge their choices and be open to revision. There is always uncertainty whether the right choice has been made in our complex dynamic processes, where one action could impact several other systems.

To help visualize what this might look like, I created the Dynamic Mental Network concept. We have previously discussed how picking up cues and recognizing patterns can speed up problem detection and response. The next challenge is to apply this in a complex, dynamic environment. When we have a complex mental model of the process, we can anticipate problems before they escalate, and we can also anticipate the impact a problem will have on other systems so we can mitigate its effects.

Our panel operators must validate that they have made the right choice while the process is in transition. Picking up on cues and patterns is a constant process of detecting problems, anticipating what may be evolving, and validating that the solutions are effective. There is no pause button. During some upsets a panel operator may not have five minutes to choose a course of action, never mind six months.

Fortunately, we have simulators to train our operators, and they do have a pause button so they can develop the skills required to pick up on cues, anticipate developments and validate their actions. Simulator training is not only about learning to complete tasks and execute procedures, but also about developing a thought process that is critical to responding to problems that have never been thought of.

The Dynamic Mental Networks concept is an attempt to further our understanding of how process operators must navigate difficult problems in a dynamic environment.

Ask Greg McMillan - What role do you see dynamic simulation playing in the future of best liquid reagent dilution for pH control?

Ask Greg McMillan

We ask Greg:

What role do you see dynamic simulation playing in the future of best liquid reagent dilution for pH control?

Greg's Response:

A common misconception is that the slope of the titration curve and, therefore, the system sensitivity can be decreased by reagent dilution. Reagent dilution has a negligible effect on the shape of the titration curve: the curve slope will appear smaller if the same abscissa is used because only a portion of the original curve is displayed. The numbers along the abscissa must be multiplied by the ratio of the old to the new reagent concentration to show the entire original titration curve. For example, the abscissa values would have to be doubled if the reagent concentration were cut in half.
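The abscissa correction described above is a single multiplication. This sketch assumes the abscissa is the reagent-to-influent flow ratio and moves the points of an original titration curve to where they fall after dilution; the curve points are hypothetical.

```python
from typing import List, Tuple


def rescale_abscissa(curve: List[Tuple[float, float]], old_conc: float,
                     new_conc: float) -> List[Tuple[float, float]]:
    """Map (reagent ratio, pH) points for the original reagent onto the diluted reagent.

    The same pH is reached when the ratio is multiplied by old_conc / new_conc,
    so the shape of the curve is unchanged; only the abscissa is stretched.
    """
    factor = old_conc / new_conc
    return [(ratio * factor, ph) for ratio, ph in curve]


original = [(0.5, 3.0), (1.0, 7.0), (1.5, 11.0)]  # hypothetical points
diluted = rescale_abscissa(original, old_conc=20.0, new_conc=10.0)
print(diluted)  # [(1.0, 3.0), (2.0, 7.0), (3.0, 11.0)]
```

The pH change per unit of flow ratio is halved, but each unit of ratio now carries half as much reagent, so the pH change per unit of reagent actually added is unchanged.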

Properly designed dilution systems offer a variety of performance benefits. Most chemists use diluted reagents to make titration easier and more accurate. If this is true in the lab, imagine how much more important it is in the field, which is fraught with nonideal conditions. Dilution can reduce reagent valve plugging, reagent transportation delay, and reagent viscosity. It can prevent laminar flow and partially filled pipes and dramatically improve dispersion in a mixture. For sodium hydroxide, dilution also lowers the freezing point, which eases winterization problems, and reduces corrosion and plugging tendency. However, sulfuric acid becomes more corrosive when diluted.

If reagent dilution is used, the system must be carefully designed to prevent creating reagent concentration upsets and delivery delays. The pH controller should throttle the diluted reagent. The mass flow of water should be ratioed to the mass flow of reagent, and a density controller should trim the ratio. Coriolis flow meters should be used to improve the mass flow measurement reproducibility and provide an accurate density measurement for concentration control. The temperature measurement from the Coriolis meter can also be used to compensate the density measurement, particularly if the feed temperature is not constant. For steep titration curves and in-line pH control, a storage tank for a diluted reagent with a high recirculation flow should be installed to smooth out the fast reagent concentration disturbances from the dilution.
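The base water-to-reagent mass ratio follows from a mass balance on the solute: target = reagent × m_r / (m_r + m_w), so m_w / m_r = reagent / target − 1. In the sketch below, representing the density controller's trim as a multiplier near 1.0 on that ratio is a hypothetical design choice for illustration, and the concentrations are example values.

```python
def water_to_reagent_ratio(reagent_wt_frac: float, target_wt_frac: float) -> float:
    """Mass of dilution water per unit mass of reagent for a target concentration.

    From the mass balance target = reagent * m_r / (m_r + m_w).
    """
    if not 0.0 < target_wt_frac < reagent_wt_frac:
        raise ValueError("target must be between 0 and the reagent concentration")
    return reagent_wt_frac / target_wt_frac - 1.0


def water_flow_setpoint(reagent_mass_flow: float, base_ratio: float,
                        density_trim: float) -> float:
    """Water mass flow set point: base ratio x reagent mass flow, trimmed by the
    density controller output (density_trim is a hypothetical multiplier near 1.0)."""
    return reagent_mass_flow * base_ratio * density_trim


ratio = water_to_reagent_ratio(0.50, 0.20)  # e.g., 50 wt% NaOH diluted to 20 wt%
print(f"{ratio:.2f} kg water per kg reagent")  # 1.50
print(water_flow_setpoint(100.0, ratio, 1.02))  # kg/h of water with a 2% trim
```

The ratio handles fast changes in reagent flow, while the slower density trim corrects for errors in the assumed reagent concentration.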

Dynamic simulations with piping and control system dynamics are needed to determine the best liquid reagent dilution design.

For much more knowledge, see the ISA book Advanced pH Measurement and Control Fourth Edition (use promo code ISAGM10 for a 10% discount on Greg’s ISA books).

Ask Greg McMillan - What role do you see dynamic simulation playing in the future of best liquid reagent delivery for pH control?

Ask Greg McMillan

We ask Greg:

What role do you see dynamic simulation playing in the future of best liquid reagent delivery for pH control?

Greg's Response:

If the reagent pipeline is partially filled or empty or a dip tube is backfilled with process fluid, the reagent delivery delay becomes the biggest source of dead time in a pH loop for the small reagent flows commonly required for neutralization. Whenever a reagent control valve closes or a metering pump stops, the reagent continues to drain into the process, and process fluid can be forced back up into the reagent injection or dip tube. Even if hydraulics do not promote much drainage or backfilling of the dip tubes, ion migration from high to low concentrations will proceed until an equilibrium is reached between the concentrations in the reagent tube and the process volume. As a result, the pH will continue to be driven by the drainage and migration of reagent after the control valve closes or the metering pump stops. If the valve is closed or the pump is stopped for a long time, when the valve opens or the pump starts, it must flush out process components in the tubes before the reagent gets into the volume. The worst-case delivery time delay is the volume of the backfilled tube divided by the reagent flow. Because dip tubes are designed to be large enough to withstand agitation and the design standard for normal flows is to take the dip tube down toward the impeller, the reagent delivery delay can be several orders of magnitude larger than the turnover time.
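The worst-case delivery delay stated above (backfilled volume divided by reagent flow) is easy to estimate. The 1 inch inside diameter, 10 ft dip tube and the 1 gal/h reagent flow in this sketch are hypothetical values chosen to match the small reagent flows mentioned earlier.

```python
import math

CUBIC_INCHES_PER_US_GALLON = 231.0


def backfilled_tube_volume_gal(inside_diameter_in: float, length_in: float) -> float:
    """Volume of a cylindrical dip tube or injection line in US gallons."""
    area_in2 = math.pi * (inside_diameter_in / 2.0) ** 2
    return area_in2 * length_in / CUBIC_INCHES_PER_US_GALLON


def worst_case_delivery_delay_min(volume_gal: float, reagent_flow_gph: float) -> float:
    """Worst-case reagent delivery delay (min): backfilled volume divided by reagent flow."""
    return 60.0 * volume_gal / reagent_flow_gph


# Hypothetical 1 inch ID dip tube, 10 ft (120 in) long, with 1 gal/h of reagent
vol = backfilled_tube_volume_gal(1.0, 120.0)
delay = worst_case_delivery_delay_min(vol, 1.0)
print(f"{vol:.3f} gal backfilled -> {delay:.1f} min delivery delay")
```

Even this modest tube holds about 0.4 gal, which works out to roughly 24 minutes of dead time at 1 gal/h.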

If reagent piping is totally filled with a constant concentration of noncompressible liquid reagent, a change in valve position initiates a change in reagent flow within a second or two. An automated on-off isolation valve, close-coupled to the process connection and closed whenever the control valve throttle position is below a reasonable minimum, helps keep reagent lines pressurized and full of reagent. Injection of reagent into recirculation lines with a high flow rate just before entry into the vessel, instead of via dip tubes, offers a tremendous decrease in reagent delivery delay.
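The isolation valve logic described above can be sketched as a small state machine. The separate close and reopen limits (hysteresis) are an added assumption, intended to keep the on-off valve from chattering when the control valve hovers near the minimum; the 2% and 3% limits are hypothetical.

```python
class IsolationValveLogic:
    """Open/close an on-off isolation valve from the control valve position.

    The valve closes when the throttle position falls below close_below_pct and
    reopens only when it rises above open_above_pct. The gap between the two
    limits (hysteresis) is an assumption; the limits themselves are hypothetical.
    """

    def __init__(self, close_below_pct: float = 2.0, open_above_pct: float = 3.0):
        if open_above_pct <= close_below_pct:
            raise ValueError("open limit must exceed close limit")
        self.close_below = close_below_pct
        self.open_above = open_above_pct
        self.is_open = False

    def update(self, control_valve_pct: float) -> bool:
        """Return the isolation valve state for the latest control valve position."""
        if self.is_open and control_valve_pct < self.close_below:
            self.is_open = False
        elif not self.is_open and control_valve_pct > self.open_above:
            self.is_open = True
        return self.is_open


logic = IsolationValveLogic()
print([logic.update(p) for p in (5.0, 2.5, 1.5, 2.5, 3.5)])
```

The valve stays open at 2.5% on the way down, closes at 1.5%, and does not reopen until the control valve position rises above 3%.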

Dynamic simulations with piping and dip tube dynamics are needed to determine the best liquid reagent delivery design.

For much more knowledge, see the ISA book Advanced pH Measurement and Control Fourth Edition (use promo code ISAGM10 for a 10% discount on Greg’s ISA books).

Ask Greg McMillan - What role do you see dynamic simulation playing in the future of best reagent selection for pH control?

Ask Greg McMillan

We ask Greg:

What role do you see dynamic simulation playing in the future of best reagent selection for pH control?

Greg's Response:

For a reagent dose to be precise enough, the reagent’s logarithmic acid dissociation constant (pKa) should be close to the pH set point so that it tends to flatten the titration curve. The reagent viscosity should be low enough to ensure fully turbulent flow, which is important for consistent dosing. The reagent should be free from solids and slime because of the tiny control valve trims used. A fast reagent delivery requires that the reagent viscosity be low enough for the dose to start quickly and mix rapidly with the effluent. An extraordinary time delay has been observed for starting the flow of 98% sulfuric acid through an injection orifice because of its high viscosity. It has been compared to getting ketchup out of a bottle. Mixing an influent stream with a viscous reagent stream, such as 98% sulfuric acid or 50% sodium hydroxide, which are about 40 times more viscous than the typical influent stream, is difficult and requires greater agitation intensity and velocity. A highly viscous reagent dose tends to travel as a glob through the mixture. Lastly, the reagent should be in the liquid phase.
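Why a pKa near the set point flattens the titration curve can be seen from the standard buffer capacity expression for water plus a single weak acid/base pair: the titration curve slope (pH change per unit of strong reagent) is the reciprocal of the buffer capacity, and the weak-pair term peaks at pH = pKa. This sketch assumes one monoprotic pair, ignores activity effects, and uses a hypothetical 0.001 mol/L concentration.

```python
import math


def buffer_capacity(ph: float, pka: float, total_conc_mol_l: float,
                    kw: float = 1e-14) -> float:
    """Buffer capacity (mol/L per pH unit) of water plus one weak acid/base pair.

    beta = ln(10) * (Kw/[H+] + [H+] + C*Ka*[H+]/(Ka + [H+])**2)
    kw defaults to the 25 °C value.
    """
    h = 10.0 ** (-ph)
    ka = 10.0 ** (-pka)
    water = kw / h + h
    weak = total_conc_mol_l * ka * h / (ka + h) ** 2
    return math.log(10.0) * (water + weak)


def titration_slope(ph: float, pka: float, total_conc_mol_l: float) -> float:
    """pH change per mol/L of strong reagent added: the reciprocal of buffer capacity."""
    return 1.0 / buffer_capacity(ph, pka, total_conc_mol_l)


# Hypothetical 0.001 mol/L weak pair, set point at pH 7
for pka in (7.0, 9.0, 11.0):
    print(f"pKa {pka}: slope at pH 7 = {titration_slope(7.0, pka, 0.001):.0f} pH per mol/L")
```

Moving the pKa two units away from the set point steepens the curve at pH 7 by more than an order of magnitude in this example.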

The neutralization reaction of liquid components is essentially instantaneous once reagent streams are mixed with influent streams. Gas or solid reagents take seconds and minutes, respectively, to dissolve and get to actual liquid contact and mixing with the influent stream components. Reagent bubbles escape as a vapor flow when the bubble breakup time and gas dissolution time exceed the bubble rise time. Coating and plugging upstream control valves and downstream equipment from particles of unreacted reagent or precipitation of salts from reacted reagents can be so severe as to cause excessive equipment maintenance and downtime. Liquid reagents such as ammonia can choke the control valve from flashing in the vena contracta or cause cavitation damage in the valve and the piping immediately downstream. Particles in the reagent can erode the valve trim. Some waste lime systems have rocks that can quickly tear up a valve seat and plug.

Dynamic simulations with representative physical properties and phases are needed to determine the best reagent.

For much more knowledge, see the ISA book Advanced pH Measurement and Control Fourth Edition (use promo code ISAGM10 for a 10% discount on Greg’s ISA books).

Ask Ron Besuijen - How does stress impact decision-making, and how can training help mitigate the physiological effects of stress?

Ask Ron Besuijen

We ask Ron:

How does stress impact decision-making, and how can training help mitigate the physiological effects of stress?

Ron's Response:

In the last pod, we discussed how stress can impact performance and some of the physiological responses to stress.

In her book The Unthinkable: Who Survives When Disaster Strikes, Amanda Ripley discusses the three stages people go through when responding to critical situations. The first is Denial, when we may try to normalize the event and fit it into past patterns and experiences. The next is Deliberation, when we like to form groups to discuss what is happening and get support. The last is the Decisive moment, when we take action to respond to the event.

To get past the Denial step we can highlight the key parameters or indicators that a particular event has happened. For example, if a primary compressor trips and requires the facility to go to a safe park state, what emergency alarms will come in? Is there a speed indicator that can be referred to? Knowing the key indicators will help move the panel operator through denial quickly.

Deliberation can happen before an event during training. Discuss why the responses from a procedure are important and what impact they will have on the process. This discussion should happen before the response is practiced on the simulator. Sharing similar events or stories can also help to move through the Deliberation step faster.

Training and experience can help us move to the Decisive step faster and lessen the impact of an event. Simulator training is an excellent tool to minimize the effects of stress during critical incidents. Emergency procedures can be practiced, which allows an operator to put together the responses required for all the systems and understand how they can impact each other. A procedure is written in a linear fashion, but the actual process is much more dynamic, with many interacting systems.

A loss of containment and how to provide isolation can also be practiced on a simulator. This will lessen the stress responses if a similar event occurs and prepare operations to make key decisions for these events.

Even though an incident may not evolve the same way every time, training will develop pattern recognition skills that will help to minimize stress and the physiological responses.

Ask Greg McMillan - What role do you see dynamic simulation playing in the future of best control valve design for pH control?

Ask Greg McMillan

We ask Greg:

What role do you see dynamic simulation playing in the future of best control valve design for pH control?

Greg's Response:

Stick and slip generally occur together and have a common cause of friction in the actuator design, stem packing, and seating surfaces. Rotary valves with high temperature packing and tight shutoff (the so-called high-performance valve) exhibit the most stick-slip. Rotary valves also tend to have shaft windup, where the actuator shaft moves but the ball, disc, or plug does not. It is much worse at positions less than 20%, where the ball, disc, or plug is starting to rotate into the sealing surfaces. For sliding stem (globe) valves, the stick-slip increases below 10% travel as the plug starts to move into the seating ring. These problems are more deceptive and problematic in rotary valves because the smart positioner measures the actuator shaft position and not the actual position of the ball, disc, or plug. If there is stick-slip, the controller will never get to the set point and there will always be a limit cycle. The biggest culprits are low leakage classes and the big squeeze from graphite and environmental packing, particularly when they are tightened without a torque wrench. A bigger actuator may help but does not eliminate the problem.
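A deliberately simplified stick-slip model makes the behavior concrete: the valve stays stuck until the controller signal differs from the actual position by more than a stick band, then slips all the way to the signal. This is an assumed illustration, not a vendor or standards-based stiction model, and the 0.1% band and ramp are hypothetical.

```python
from typing import List


def stick_slip_valve(signals_pct: List[float], stick_band_pct: float,
                     start_pct: float = 0.0) -> List[float]:
    """Simplified stick-slip (stiction) model of valve travel.

    The valve holds its position until |signal - position| exceeds
    stick_band_pct, then jumps (slips) to the signal.
    """
    position = start_pct
    positions = []
    for signal in signals_pct:
        if abs(signal - position) > stick_band_pct:
            position = signal  # slip: jump to the requested position
        positions.append(position)
    return positions


# Slow ramp of 0.04% per scan with a 0.1% stick band (hypothetical values)
ramp = [0.0, 0.04, 0.08, 0.12, 0.16, 0.20, 0.24, 0.28]
print(stick_slip_valve(ramp, 0.1))
# [0.0, 0.0, 0.0, 0.12, 0.12, 0.12, 0.24, 0.24]
```

The valve moves in a staircase of jumps rather than following the ramp. Inside a loop, a controller with integral action keeps ramping its output while the valve is stuck, and the jump that follows overshoots, which is how the sustained limit cycle described above arises.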

For the best throttling valves (globe valves with diaphragm actuators), the stick-slip is normally only about 0.1%, and its effect is typically observable only in pH system trend recordings. The control valve resolution will clearly show up as a large sustained oscillation for a set point on the steep portion of the titration curve because of the high process gain. The extreme sensitivity of the pH process requires a valve resolution that goes well beyond the norm. The number of stages of equipment needed for neutralization may be dependent on the capability of the control valve. It is difficult to effectively use more than one control valve per stage. An extremely small and precise control valve is necessary to keep the limit cycle within the control band. To achieve the large range of reagent addition and extreme precision required, several stages are used with the largest control valve on the first stage and the smallest control valve on the last stage.
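A rough way to see why a smaller valve on the last stage helps is to estimate the pH change caused by one resolution-limited valve step: the step in reagent-to-influent ratio times the local titration curve slope. This sketch assumes a linear installed characteristic and a locally linear titration curve, and every number in it (flows, 0.1% resolution, slope) is hypothetical.

```python
def ph_step_from_resolution(resolution_pct: float, valve_capacity: float,
                            influent_flow: float, titration_slope: float) -> float:
    """Rough pH change caused by one resolution-limited step of the reagent valve.

    resolution_pct  : smallest valve movement, % of full travel
    valve_capacity  : reagent flow at full travel (same units as influent_flow)
    titration_slope : local titration curve slope, pH per unit of
                      reagent-to-influent flow ratio
    Assumes a linear installed characteristic and a locally linear titration curve.
    """
    ratio_step = (resolution_pct / 100.0) * valve_capacity / influent_flow
    return titration_slope * ratio_step


# Hypothetical: 1000 gpm influent, slope of 20,000 pH per unit ratio at the set point
big = ph_step_from_resolution(0.1, 10.0, 1000.0, 2.0e4)   # 10 gpm reagent valve
small = ph_step_from_resolution(0.1, 1.0, 1000.0, 2.0e4)  # 1 gpm reagent valve
print(f"10 gpm valve: {big:.2f} pH per step, 1 gpm valve: {small:.2f} pH per step")
```

With the same resolution, the valve with one tenth of the capacity produces one tenth of the pH step, which is the reason the smallest valve goes on the last stage.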

Dynamic simulations with control valve resolution and lost motion included are needed to determine the best control valve and number of neutralization stages.

For much more knowledge, see the ISA book Advanced pH Measurement and Control Fourth Edition (use promo code ISAGM10 for a 10% discount on Greg’s ISA books).

Best Practices for Control Valve and Variable Frequency Drive (VFD) Design

The achievement of a fast and precise final control element is critical for pH control. The focus on control valve resolution and rangeability is an essential starting point, but there are many other considerations. Also, variable frequency drives (VFDs) are often touted as offering tighter control, but many design and installation choices can make this expectation unrealistic. To help us all be aware of potential problems and recognized solutions, the following best practices are offered based on the content in Chapter 7 of the ISA book Essentials of Modern Measurements and Final Elements in the Process Industry.

- Use sizing software with physical properties for worst case operating conditions.

- Include effect of piping reducer factor on effective flow coefficient.

- Select valve location and type to eliminate or reduce damage from flashing.

- Preferably use a sliding stem valve (size permitting) to minimize backlash and stiction, unless crevices and trim cause concerns about erosion, plugging, sanitation, or accumulation of solids, particularly monomers that could polymerize. For single-port valves, install “flow to open” to eliminate the bathtub stopper swirling effect.

- If a rotary valve is used, select a diaphragm actuator with a splined actuator-shaft-to-stem connection, an integral cast ball or disk stem, and minimal seal and packing friction to minimize lost motion (deadband) and the resolution limitation.

- Use conventional Teflon packing or, for higher temperature ranges, Ultra Low Friction (ULF) Teflon packing; avoid overtightening the packing; and consider the possible use of a compatible stem lubricant.

- Compute the installed valve flow characteristic for worst case operating conditions.

- Size actuator to deliver more than 150% of the maximum torque or thrust required.

- Select actuator and positioner with threshold sensitivities of 0.1% or better.

- Ensure total valve assembly deadband is less than 0.4% over the entire throttle range.

- Ensure total valve assembly resolution is better than 0.2% over the entire throttle range.

- Choose an inherent flow characteristic and a valve-to-system pressure drop ratio that do not change the valve gain by more than 4:1 over the entire process operating point and flow range.

- Tune the positioner aggressively (high proportional gain) without integral action, and use a readback that indicates actual plug, disk, or ball travel instead of just actuator shaft movement.

- Never replace positioners with volume boosters. Instead, put volume boosters on the positioner output to reduce the valve 86% response time for large signal changes, with the booster bypass valve opened just enough to ensure stability.

- Use small (0.2%) as well as large (20%) step changes to test the valve 86% response time and see whether changes are needed to meet the desired 86% response time.

- See ISA TR75.25.02 Annex A for more details on valve response and relaxing expectations on travel gain and 86% response time for small and large signal changes, respectively.

- Counterintuitively, increase the PID gain to reduce the oscillation period and/or amplitude from lost motion, stick-slip, and poor actuator or positioner sensitivity.

- Use external-reset feedback detailed in Chapter 8 with accurate and fast valve position readback to stop oscillations from poor precision and slow response time.

- Use input and output chokes and isolation transformers to prevent EMI from the VFD inverter.

- Use PWM to reduce torque pulsation (cogging) at low VFD speeds.

- Use a VFD inverter-duty motor with Class F insulation and a 1.15 service factor, and a totally enclosed fan-cooled (TEFC) motor with a constant-speed fan or booster fan, or a totally enclosed water-cooled (TEWC) motor, in high-temperature applications to prevent overheating.

- Use a NEMA Design B motor instead of a Design A motor to prevent a steep VFD torque curve.

- Use bearing insulation or a path to ground to reduce bearing damage from electrical discharge machining (EDM). Damage from EDM is worse with the older 6-step voltage VFD technology.

- Size the pump to prevent it from operating on the flat part of the VFD pump curve.

- Use a recycle valve to keep the VFD pump discharge pressure well above static head at low flow, and use a low-speed limit to prevent reverse flow at the highest destination pressure.

- Use at least 12-bit signal input cards to improve the VFD resolution limit to 0.05% or better.

- Use drive and motor with a generous amount of torque for the application so that speed rate-of-change limits in the VFD setup do not prevent changes in speed from being fast enough to compensate for the fastest possible disturbance.

- Minimize the deadband introduced into the VFD configuration (often set in a misguided attempt to reduce the response to noise), because it causes delay and limit cycling.

- For VFD tachometer control, use magnetic or optical pickup with enough pulses per shaft revolution to meet the speed resolution requirement.

- For tachometer control, keep the speed control in the VFD to avoid violating the cascade rule that the secondary speed loop be at least 5 times faster than the primary process loop.

- To increase rangeability to 80:1, use fast cascade control of speed to torque in the VFD to provide closed loop slip control.

- Use external-reset feedback with accurate and fast speed readback to stop oscillations from poor VFD resolution and from excessive deadband and rate limiting in the VFD configuration.

- Use foil braided shield and armored cable for the VFD output, spaced at least one foot from signal wires and never crossing signal wires, ideally in separate cable trays.
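Two of the items above, computing the installed flow characteristic and keeping the valve gain change within 4:1, can be checked together. This sketch uses the textbook installed-characteristic relation Q/Qmax = f / sqrt(Dr + (1 − Dr)f²), where f is the inherent flow fraction and Dr is the valve-to-system pressure drop ratio at maximum flow; it assumes a constant total system pressure drop with piping losses proportional to flow squared. The equal-percentage rangeability of 50 and the 10% to 90% travel range are hypothetical choices.

```python
import math
from typing import Callable, List


def linear(x: float) -> float:
    """Ideal linear inherent characteristic."""
    return x


def equal_percentage(x: float, rangeability: float = 50.0) -> float:
    """Ideal equal-percentage characteristic (ignores behavior near closed)."""
    return rangeability ** (x - 1.0)


def installed_flow(x: float, inherent: Callable[[float], float], dr: float) -> float:
    """Installed flow fraction Q/Qmax for valve travel x (0-1).

    dr is the valve-to-system pressure drop ratio at maximum flow.
    """
    f = inherent(x)
    return f / math.sqrt(dr + (1.0 - dr) * f * f)


def gain_ratio(inherent: Callable[[float], float], dr: float,
               x_low: float = 0.1, x_high: float = 0.9, n: int = 81) -> float:
    """Max/min installed valve gain dQ/dx over [x_low, x_high] (central differences)."""
    h = 1e-4
    gains: List[float] = []
    for i in range(n):
        x = x_low + (x_high - x_low) * i / (n - 1)
        gains.append((installed_flow(x + h, inherent, dr)
                      - installed_flow(x - h, inherent, dr)) / (2 * h))
    return max(gains) / min(gains)


for name, char in (("linear", linear), ("equal percentage", equal_percentage)):
    for dr in (0.1, 0.25, 0.5):
        print(f"{name:>16}, Dr={dr}: gain ratio {gain_ratio(char, dr):.1f}:1")
```

For a linear inherent characteristic, a low pressure drop ratio turns the installed characteristic toward quick opening; at Dr = 0.1 the gain ratio over 10% to 90% travel is about 21:1, far outside the 4:1 guideline, while at Dr = 0.5 it is about 2.4:1.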

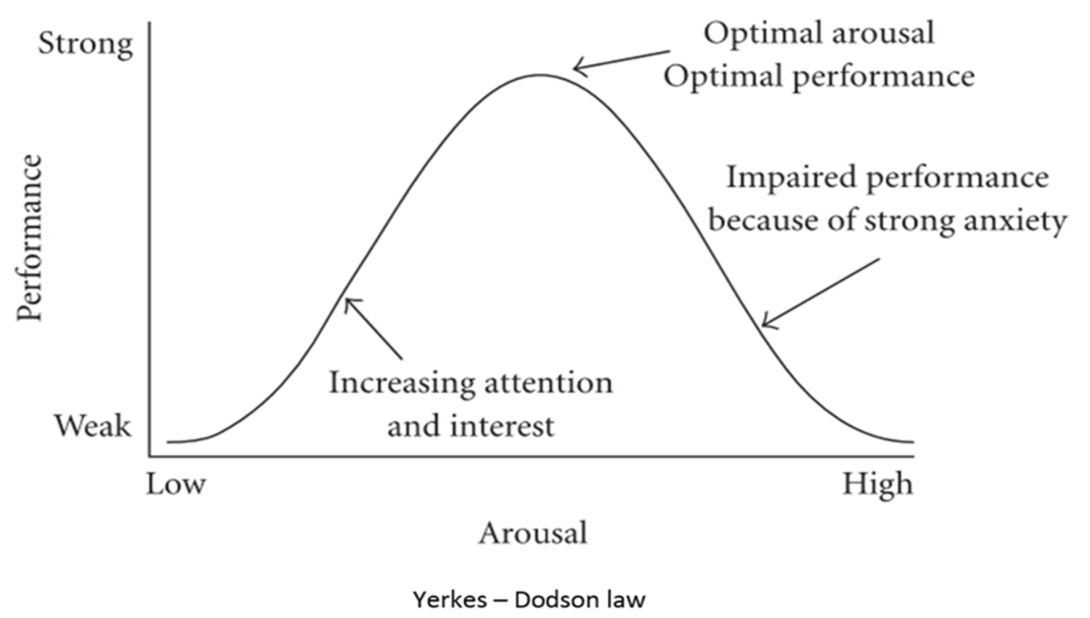

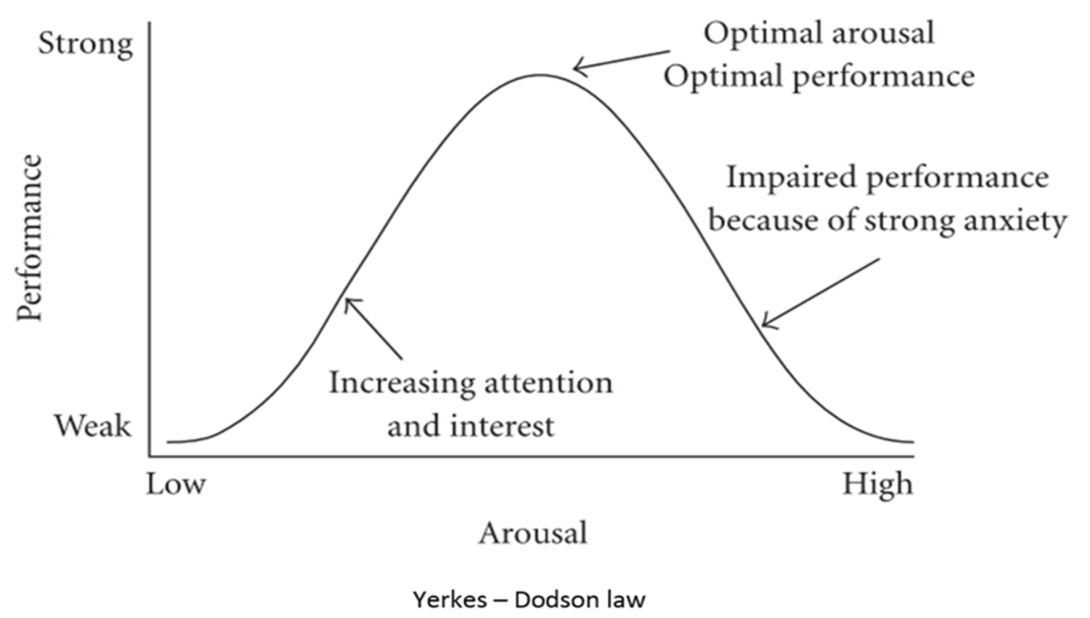

Ask Ron Besuijen - How can stress affect decision making, and how can training reduce the physiological responses?

Ask Ron Besuijen

We ask Ron:

How can stress affect decision making, and how can training reduce the physiological responses?

Ron's Response:

Capt. Chesley “Sully” Sullenberger was quoted as saying that the moments before the emergency water landing of US Airways Flight 1549 were "the worst sickening, pit-of-your-stomach, falling-through-the-floor feeling" that he had ever experienced. This was after a bird strike shut down both engines and forced a landing in the Hudson River. I can relate to this feeling.

Referring to the above diagram, we can see that not all stress is bad. We need some arousal to keep us interested. However, if the stress is too high, it can impair our performance. What your peak performance is can depend on your experience and training.

There can be physiological responses to high-stress situations. The brain may revert to a primitive response that overrides higher functions. Time may seem to slow down or speed up. Tunnel vision may limit the ability to see the big picture. Tunnel hearing or a loss of hearing may occur. Feelings of detachment or dissociation may be experienced. Coordination may be affected, or shaking hands may impair the use of a keyboard. Speech may be affected, either lost entirely or raised to a shrill voice that may be hard to understand. Most people do not normally panic. They are more likely to freeze and minimize the severity of the event.

In the next pod we will discuss how we respond to critical situations and how we can train and prepare for them.

Ask Greg McMillan - What role do you see dynamic simulation playing in the future of best static mixer use for pH control?

Ask Greg McMillan

We ask Greg:

What role do you see dynamic simulation playing in the future of best static mixer use for pH control?

Greg's Response:

Static mixers have noisy pH measurements. Signal filtering can help, but a downstream volume is needed to smooth pH oscillations that can be larger than 6 pH units for steep titration curves.

Static mixers have motionless internal elements that subdivide and recombine the flow stream repeatedly and cause rotational circulation to provide radial mixing of the stream but very little axial or back mixing. Consequently, fluctuations in pH over the cross section are smoothed, but fluctuations in pH with time, which are axial, show up unattenuated in the discharge. The equipment dead time is about 80% of the residence time for a static mixer, and most manufacturers are working toward making their static mixers exhibit plug flow to reduce the residence time distribution. Although this is beneficial for many chemical reactions, it makes the discharge pH more likely to oscillate, spike, and violate constraints.

Flow pulses from a positive displacement reagent pump and drops associated with a high-viscosity reagent or low reagent velocity will not be back mixed and will cause a noisy pH signal. Bubbles from a gaseous reagent will also cause a noisy pH signal because the residence time is not sufficient for complete reagent dissolution. Although a static mixer has a poor dead-time-to-time-constant ratio that tends to make a pH loop oscillate, it offers the significant advantage of a small magnitude of dead time and a small volume of off-spec material from a load upset. Also, the reagent injection delay for close coupled reagent valves is much less than for vessels.

The fast correction and small dead time mean that a static mixer used in conjunction with a volume can eliminate the need for a well-mixed vessel. The static mixer can be on the feed or recirculation line of a volume. pH waste treatment systems use static mixers in series separated by volumes. The volumes may simply use an eductor to provide some mixing for smoothing of the oscillations.
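To see why a downstream volume smooths oscillations that pass through a plug-flow static mixer unchanged, the sketch below compares amplitude ratios for a sinusoidal oscillation. It assumes the downstream volume behaves as a well-mixed first-order lag whose time constant is roughly its residence time, and that plug flow acts as pure dead time, which delays but does not attenuate. Treating pH as a linear signal is itself an approximation: mixing averages ion concentrations, not pH, so on a steep titration curve the actual pH attenuation will differ. The period, amplitude, and time constants are hypothetical.

```python
import math


def first_order_amplitude_ratio(time_constant_s: float, period_s: float) -> float:
    """Steady-state amplitude ratio of a first-order lag for a sinusoid.

    AR = 1 / sqrt(1 + (omega * tau)^2), with omega = 2*pi / period.
    """
    omega = 2.0 * math.pi / period_s
    return 1.0 / math.sqrt(1.0 + (omega * time_constant_s) ** 2)


# Plug flow (pure dead time) shifts the phase but leaves amplitude unchanged.
PLUG_FLOW_AMPLITUDE_RATIO = 1.0


if __name__ == "__main__":
    oscillation_period_s = 10.0  # hypothetical oscillation period at the mixer discharge
    amplitude_in_ph = 1.0        # hypothetical peak amplitude, pH units (linearized)
    for tau in (0.0, 5.0, 60.0, 300.0):  # hypothetical downstream time constants, s
        ar = first_order_amplitude_ratio(tau, oscillation_period_s)
        print(f"tau = {tau:6.1f} s -> output amplitude = {amplitude_in_ph * ar:.3f} pH")
```

The attenuation grows with the ratio of the volume's time constant to the oscillation period, so even a modest volume strongly damps fast oscillations from the mixer.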

Dynamic simulations with mixing nonuniformity and dead time can show the best use of static mixers in conjunction with vessels.

For much more knowledge, see the ISA book Advanced pH Measurement and Control Fourth Edition (use promo code ISAGM10 for a 10% discount on Greg’s ISA books).

Best Practices for pH Equipment Design

Equipment and the associated piping are the most frequent and largest sources of loop dead time. Assuming a healthy electrode and a precise final control element, the biggest detriments to pH control system performance are a lack of understanding of mixing profiles, especially the fundamental concept of back mixing, and of the transportation delays of reagent into the mixture and of changes in process pH to the electrode. To minimize these problems, the distances to and from the mixture are minimized, the axial agitator pumping rate in vessels for back mixing is maximized, and dead zones are minimized. The strategic use of vessels and static mixers in various pH control system designs, and the importance of minimizing dead time, will be detailed in future posts on pH control system design.

- In equipment where a pH control system injects reagent, minimize the dead time contribution to the loop dead time from mixing and transportation delays to less than 6 s.

- Use large volumes, which are susceptible to large dead times, upstream or downstream of the pH control system to average out pH changes for smoother pH control and less reagent consumption. Upstream volumes are particularly effective where the influent pH swings above and below the pH set point.

- If large volumes exist downstream for smoothing pH fluctuations, a static mixer with a close-coupled reagent injection to the mixer inlet and electrodes in the pipeline about 20 pipe diameters downstream of the mixer outlet is an option for fast pH control to minimize new equipment cost.

- Use static mixers in the feed or recirculation line of the vessel to premix influent and reagent added to the static mixer inlet to minimize mixing and injection delays.

- Use impellers designed for axial agitation in vessels where a pH control system injects reagent. Keep the vessel diameter-to-height ratio between 0.5 and 1.5, and size the agitator pumping rate to give a turnover time (mixing dead time) significantly less than 6 s.

- In vessels where a pH control system injects reagent, use baffles in the well-mixed vessels to prevent dead zones and promote an axial agitation flow pattern.
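The 6 s guideline can be checked with simple transportation-delay arithmetic: a plug-flow delay is the pipe volume divided by the volumetric flow. The sketch below adds a hypothetical close-coupled injection delay, a hypothetical static mixer dead time, and the delay to electrodes 20 pipe diameters downstream, then compares the total with the 6 s target. It also checks the 0.5 to 1.5 diameter-to-height window for a vessel. All dimensions, flows, and function names are assumptions for illustration.

```python
import math

MAX_EQUIPMENT_DEAD_TIME_S = 6.0  # guideline from the best practices above


def transport_delay_s(length_m: float, pipe_diameter_m: float,
                      flow_m3_per_s: float) -> float:
    """Plug-flow transportation delay: pipe volume divided by volumetric flow."""
    area_m2 = math.pi * pipe_diameter_m ** 2 / 4.0
    return length_m * area_m2 / flow_m3_per_s


def electrode_delay_s(pipe_diameter_m: float, flow_m3_per_s: float,
                      diameters_downstream: float = 20.0) -> float:
    """Delay from the mixer outlet to electrodes a number of diameters downstream."""
    return transport_delay_s(diameters_downstream * pipe_diameter_m,
                             pipe_diameter_m, flow_m3_per_s)


def diameter_to_height_ok(diameter_m: float, height_m: float) -> bool:
    """True if the vessel diameter-to-height ratio lies between 0.5 and 1.5."""
    return 0.5 <= diameter_m / height_m <= 1.5


if __name__ == "__main__":
    pipe_d = 0.1   # hypothetical pipe diameter, m
    flow = 0.01    # hypothetical total flow, m3/s
    injection = transport_delay_s(0.3, pipe_d, flow)  # hypothetical 0.3 m run to mixer inlet
    mixer = 1.6    # hypothetical static mixer dead time, s
    electrode = electrode_delay_s(pipe_d, flow)
    total = injection + mixer + electrode
    print(f"total dead time = {total:.2f} s, "
          f"within guideline: {total < MAX_EQUIPMENT_DEAD_TIME_S}")
    print("D/H ok:", diameter_to_height_ok(3.0, 3.5))  # hypothetical vessel
```

Because the delay scales with pipe volume over flow, a larger pipe diameter at the same flow lengthens both the injection and electrode delays, which is one reason to keep injection and measurement close-coupled.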

Ask Ron Besuijen - Why do we need to justify the investment in developing and maintaining successful training programs?

Ask Ron Besuijen

We ask Ron:

Why do we need to justify the investment in developing and maintaining successful training programs?

Ron's Response:

Developing a simulator program requires a financial commitment and resources to be successful, and this commitment continues after the initial installation of the facility. One of the first questions asked when considering a simulator facility is the cost and how it can be justified. It is a business decision like any other new improvement to the process.

There are several ways to justify a simulator program. I believe the largest is operator competency. To be blunt, no one wants their company to be part of a Chemical Safety Board bulletin. This does not mean that operations caused the incident; it means that if operators must respond to an evolving situation and are more than just qualified, they may be able to prevent an incident from escalating. These types of incidents can involve loss of life, hundreds of millions of dollars in production losses, and equipment damage. It is difficult for someone who has not worked a control panel during an upset to imagine how quickly you can become cognitively overloaded.

To maintain competency, tasks must be practiced periodically. Reliability improvements and longer intervals between major maintenance shutdowns can leave operations rusty in executing these tasks. A simulator recertification program will ensure operations is prepared to respond to upsets and safely handle shutdowns.

Simulator programs have become a key instrument in improving our environmental performance and reducing flaring. This is a natural extension of on-stream time. Shutdowns and startups require flaring. Strategies to reduce flaring can be trialed on a simulator. Several approaches can be tested and refined while measuring the amount of flaring required. Safe Park applications can be developed and tested with a simulator with flaring reductions in mind.

Advanced control systems can be developed and tested on a simulator. There are always challenges implementing these systems that are difficult to anticipate in our dynamic processes. Flushing these out on a simulator reduces process upsets and flaring. The run time of these control systems can also be improved by training the operators on their function and how to troubleshoot problems.

Control system software updates can be tested on a simulator. This not only highlights errors, but it also gives the control technicians a chance to train and perfect the software rollout. Major process upsets have occurred from control system software updates. Of course, the most effective testing is when the simulator system is as close to the live system as possible.

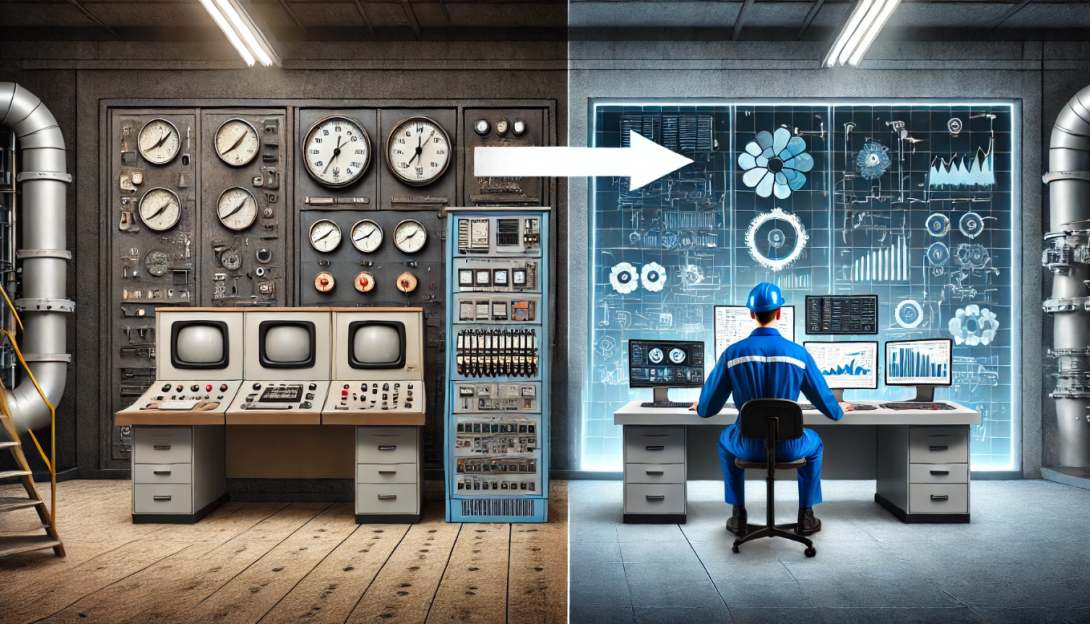

Periodically the control system is replaced with new hardware and software. This is a very disruptive period for operations as it typically involves new graphics and interfaces. These can be developed and tested on a simulator to minimize the errors in the diagrams and the links from the objects. Operations can also be trained on the new graphics to complete routine tasks and to manage upsets. New graphics can considerably slow down even an experienced operator until they learn where to find everything.

There are many ways to justify a simulator program. In many facilities, increasing on-stream time by 1% will more than pay for the program.
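The 1% figure can be turned into a quick break-even estimate. The sketch assumes the 1% is one percentage point of an 8,760-hour year and that each additional on-stream hour earns a fixed margin; the margin and the annual program cost are hypothetical placeholders to be replaced with site figures.

```python
HOURS_PER_YEAR = 8760.0


def annual_onstream_benefit(margin_per_hour: float,
                            onstream_gain_fraction: float = 0.01) -> float:
    """Extra annual margin from running a larger fraction of the year."""
    return margin_per_hour * onstream_gain_fraction * HOURS_PER_YEAR


def pays_for_itself(margin_per_hour: float, annual_program_cost: float,
                    onstream_gain_fraction: float = 0.01) -> bool:
    """True if the on-stream benefit covers the annual program cost."""
    benefit = annual_onstream_benefit(margin_per_hour, onstream_gain_fraction)
    return benefit >= annual_program_cost


if __name__ == "__main__":
    margin = 20_000.0     # hypothetical margin per on-stream hour, $
    program_cost = 1.0e6  # hypothetical annual simulator program cost, $
    benefit = annual_onstream_benefit(margin)
    print(f"benefit = ${benefit:,.0f}/yr, pays for itself: "
          f"{pays_for_itself(margin, program_cost)}")
```

The same function can be run with a smaller gain fraction to find the minimum on-stream improvement that still covers the program cost at a given site.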

Ask Greg McMillan - What role do you see dynamic simulation playing in the future of best vessel design for pH control?

Ask Greg McMillan

We ask Greg:

What role do you see dynamic simulation playing in the future of best vessel design for pH control?

Greg's Response:

pH systems are extremely sensitive to the vessel design. A poor design can make good pH control impossible in nearly all systems despite the use of the most advanced control techniques.

In vessels, there are many different internal flow patterns from agitation and many different parameters to quantify the amount of agitation. For pH control, the flow pattern should be axial, where the fluid is pulled down from the top near the shaft, circulated along the bottom to the sidewalls, and pulled back up to the top near the sidewalls. If the agitation breaks the surface without froth, its intensity level is in the ballpark for good pH control. The pattern is called axial because of the vertical up-and-down flow pattern parallel to the axis of the shaft. Baffles that are 90 degrees apart, extend vertically along the entire length of the sidewall, and are one-twelfth of the diameter in width are recommended because they help establish the vertical flow currents to reduce vortexing, swirling, and air induction from the surface, plus they increase the uniformity of the flow pattern. Propellers and pitched-blade turbines provide an axial flow pattern. A double-spiral blade and a tangential jet nozzle cause an undesirable corkscrew axial pattern because the concentration change has a long and slow corkscrew flow path.

For a vessel with axial agitation, baffles, and a liquid height about the same as the diameter, the equipment dead time is approximately the turnover time, which can be estimated as the liquid mass divided by the sum of the influent, reagent, and recirculation flows plus the agitator pumping rate. If the ratio of the equipment dead time to the time constant is equal to or less than 0.05, the vessel is classified as a vertical well-mixed tank. Horizontal tanks have a length much greater than the height. No matter how many agitators are installed, the complete volume cannot be considered axially mixed. There will be regions of stagnation, short-circuiting, and plug flow.
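The turnover-time estimate and the 0.05 criterion can be sketched as follows. The turnover time follows the definition above; approximating the vessel time constant by its residence time (liquid mass divided by influent plus reagent flow) is an added assumption, and all masses and flows are hypothetical. Under that assumption the ratio reduces to the throughput flow divided by the total circulating flow.

```python
def turnover_time_s(liquid_mass_kg: float, influent_kg_s: float,
                    reagent_kg_s: float, recirculation_kg_s: float,
                    agitator_pumping_kg_s: float) -> float:
    """Equipment dead time estimate: liquid mass over total circulating flow."""
    total_flow = (influent_kg_s + reagent_kg_s
                  + recirculation_kg_s + agitator_pumping_kg_s)
    return liquid_mass_kg / total_flow


def vessel_residence_time_s(liquid_mass_kg: float, influent_kg_s: float,
                            reagent_kg_s: float) -> float:
    """Assumed time constant: liquid mass over throughput flow."""
    return liquid_mass_kg / (influent_kg_s + reagent_kg_s)


def is_vertical_well_mixed(dead_time_s: float, time_constant_s: float,
                           limit: float = 0.05) -> bool:
    """Classification from the text: dead-time-to-time-constant ratio <= 0.05."""
    return dead_time_s / time_constant_s <= limit


if __name__ == "__main__":
    # Hypothetical vessel and flows (kg and kg/s).
    mass, influent, reagent, recirc, agitator = 2000.0, 10.0, 0.5, 0.0, 400.0
    theta = turnover_time_s(mass, influent, reagent, recirc, agitator)
    tau = vessel_residence_time_s(mass, influent, reagent)
    print(f"turnover = {theta:.2f} s, time constant = {tau:.0f} s, "
          f"ratio = {theta / tau:.4f}, well mixed: {is_vertical_well_mixed(theta, tau)}")
```

With these hypothetical numbers the turnover time is about 4.9 s and the ratio is about 0.026, so the vessel would be classified as well mixed.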

Dynamic simulations with mixing nonuniformity and dead time can show the importance of a vertical well-mixed tank.

For much more knowledge, see the ISA book Advanced pH Measurement and Control Fourth Edition (use promo code ISAGM10 for a 10% discount on Greg’s ISA books).

Ask Aaron Crews - Where does the return come from on a DCS replacement project?

Ask Aaron Crews

We ask Aaron:

You’ve mentioned the desire to avoid “replacement in kind” and to deliver ROI a couple of times. It’s not intuitive where that return would come from on a DCS replacement project – where is the value? What’s the role for simulation there?

Aaron's Response:

When we talk about ROI in a modernization, we are really talking about enabling the ROI of automation, given a modern DCS. This requires a change in automation approach but can deliver big returns.

The primary value categories fall into the following:

- Throughput
  - Bringing the process up to its expected level of performance
- Safety
  - Utilizing automation to expose fewer people to the process
  - Automated handling of abnormal or infrequent situations
  - Reducing the probability of incidents due to human error
- Consistency
  - Optimal control strategies and operating/tuning parameters under any given condition
- Digitalization
  - Asset optimization and insight through the DCS to plant personnel
  - A path for data analytics to empower enhanced decisions

Unfortunately, reaching these goals often requires a more modern DCS than what is installed. Beyond that, the DCS needs to be instrumented and automated beyond what is typically seen, especially in industries outside of perhaps life sciences and modern specialty chemical installations.

To deliver on the potential value here, simulation plays an important role. It mitigates risk by allowing for development, testing, and iteration on automation without process impact. This is key for driving production throughput and improved safety. And for those consistency applications, simulation can help with the development of state-based or dynamic control strategies as well as to train the operators through those varying operating conditions and state transitions. The interface between the process and operations post-optimization may be very different than before. Done right, this improved automation can deliver major improvements.

Some benchmarks we have seen include:

- 5% increase in equipment capacity

- 5% reduction in energy and utilities

- 0.5% yield improvement (savings through reduced feedstock)

- 20% reduction in transition times between products/grades

- 20% reduction in off-spec product

- 10% reduction in abnormal events

- 5% reduction in undesired byproducts

- 20% reduction in re-blending costs

- 10% reduction in inventories

- 20% reduction in unscheduled maintenance

- 1% reduction in unscheduled downtime

Applied to a large process, these benefits can be massive and are certainly worth the investment in automation, including dynamic process simulation.
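To show how the percentages translate into value, the sketch below applies a few of the benchmarks above to a hypothetical plant's annual cost base. The baseline dollar figures are invented for illustration, and treating each benchmark as a simple percentage of one cost line, with no interaction between categories, is a simplifying assumption.

```python
# Hypothetical annual baselines ($/yr) paired with benchmark fractions from the list above.
BENCHMARKS: dict[str, tuple[float, float]] = {
    "energy and utilities": (40.0e6, 0.05),
    "feedstock (0.5% yield)": (300.0e6, 0.005),
    "off-spec product": (5.0e6, 0.20),
    "re-blending costs": (2.0e6, 0.20),
    "unscheduled maintenance": (8.0e6, 0.20),
}


def annual_savings(benchmarks: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Savings per category: baseline cost times benchmark fraction."""
    return {name: baseline * fraction
            for name, (baseline, fraction) in benchmarks.items()}


if __name__ == "__main__":
    savings = annual_savings(BENCHMARKS)
    for name, value in savings.items():
        print(f"{name:>26}: ${value:,.0f}")
    print(f"{'total':>26}: ${sum(savings.values()):,.0f}")
```

Swapping in a site's actual cost lines, and only the benchmarks that plausibly apply to it, gives a first-pass estimate to compare against the cost of the automation and simulation work.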

Ask Ron Besuijen - Why is it important to understand the relationship and interaction between the human and machine for training?

Ask Ron Besuijen

We ask Ron:

Why is it important to understand the relationship and interaction between the human and machine for training?

Ron's Response:

Several years ago, Gary Klein and Joseph Borders completed a study of operators’ mental models that involved watching how they navigated through process upsets on a simulator (this was through the Center for Operator Performance). From this, they developed the Mental Model Matrix. The matrix explores the relationship between the user and the system, as well as the capabilities and limitations of each.

Operators must understand how the system was designed (i.e., process theory), and they must also understand how to make the system work in a dynamic environment. You may believe these are the same thing, but they are not. Without going into too many details, I can think of several instances where operations have compensated for poor design or unintended consequences when the process is at off-normal operating conditions or in a startup. It is important to design these quirks into a training simulator so the operators can become accustomed to them.